We've covered a variety of energy technologies.

Now, let's focus on the handful that are truly primed to define the next few decades of the energy industry.

We'll start by looking at trends and recent breakthroughs in the most important energy production, storage, and distribution technologies to see how they will alter our energy infrastructure.

Then, we'll look at how the future of energy demand is evolving with the development of digital intelligence.

Hybrid Solar

Fossil fuel reserves are finite and depleting quickly. Even ignoring emissions, humanity has only a few hundred years remaining before it will be forced to transition to more abundant energy sources.

Out of all the alternatives we considered, we saw that solar is the only one with the ability to fully cover our growing energy demands with existing technology.

Every second, the sun supplies our planet with >10,000x more energy than humanity consumes in that same second. To power the whole world with sunlight, we would need to cover <0.4% of land with solar panels.
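The <0.4% figure can be sanity-checked with a back-of-envelope calculation. This sketch is not the source's method: the demand, sunlight, and efficiency values below are rough assumptions, and it ignores panel spacing, storage losses, and the fact that primary energy demand overstates the electricity actually needed.

```python
# Back-of-envelope: what fraction of Earth's land would solar panels need to cover
# to meet average global energy demand? All inputs are rough assumptions.
EARTH_LAND_AREA_M2 = 1.49e14      # ~149 million km^2 of land
GLOBAL_DEMAND_W = 19e12           # assumed ~19 TW average primary energy demand
AVG_INSOLATION_W_PER_M2 = 200.0   # assumed year-round, day/night average sunlight on a panel
PANEL_EFFICIENCY = 0.20           # assumed typical commercial PV efficiency


def land_fraction_needed(demand_w: float, insolation: float, efficiency: float) -> float:
    """Fraction of land that panels must cover to supply `demand_w` on average."""
    avg_output_per_m2 = insolation * efficiency   # average electrical W per m^2 of panel
    panel_area_m2 = demand_w / avg_output_per_m2
    return panel_area_m2 / EARTH_LAND_AREA_M2


fraction = land_fraction_needed(GLOBAL_DEMAND_W, AVG_INSOLATION_W_PER_M2, PANEL_EFFICIENCY)
print(f"Panel area needed: {fraction:.2%} of land")
```

With these assumptions the result lands around 0.3%, consistent with the <0.4% figure; different sunlight or efficiency assumptions shift it proportionally.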

Constant innovation in photovoltaics and lithium-ion batteries over the past few decades has brought us to a turning point where hybrid solar plants using collocated solar panels and grid storage may be primed to supply a much larger share of global electricity generation.

Let's look at what trends tell us about this phenomenon.

hybrid solar farm in the Mojave Desert

Photovoltaics

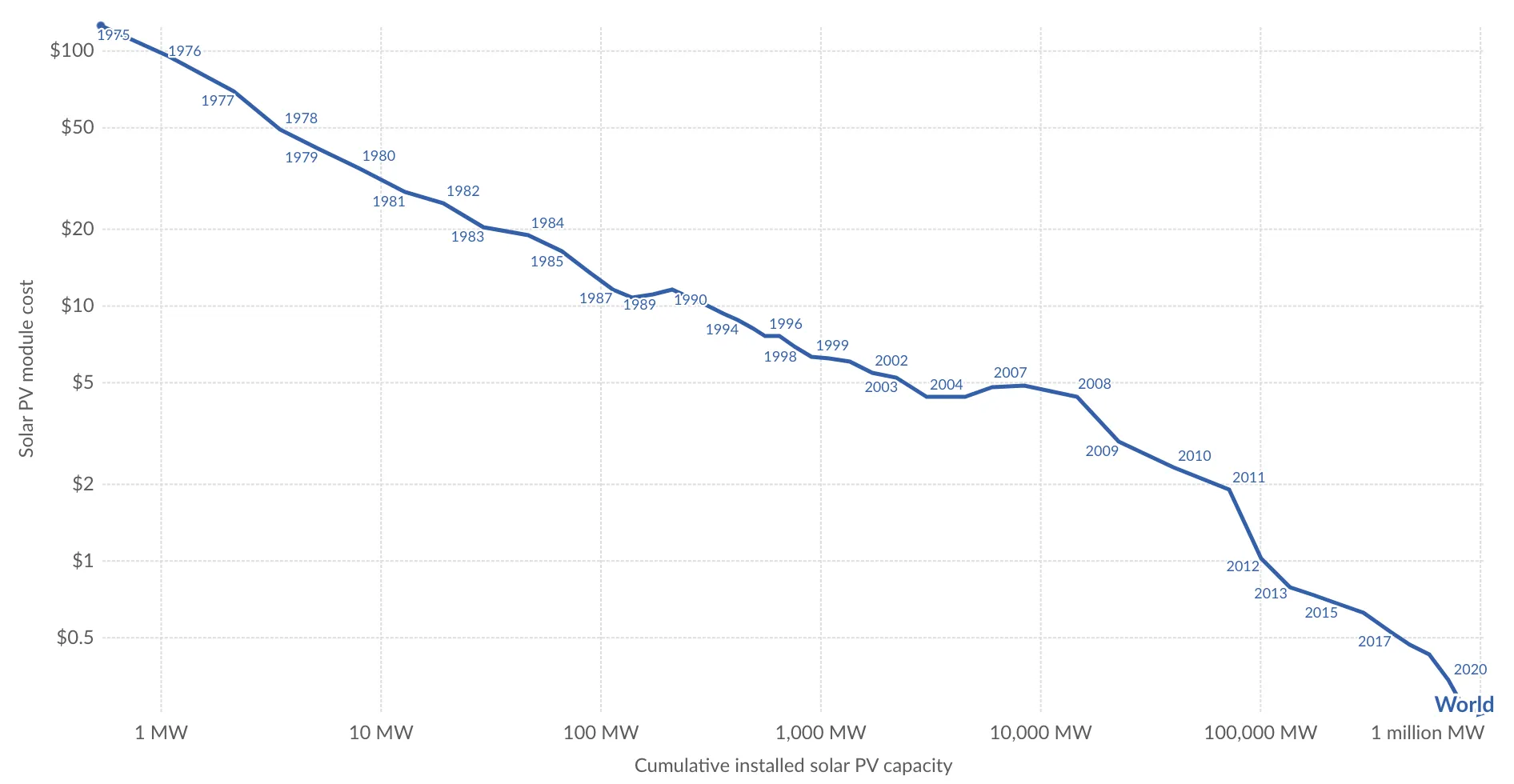

Innovations in solar photovoltaic technology have driven down the cost of solar modules from $100/W in 1976 to <$0.3/W in 2020, a >300x cost decrease over ~45 years.

During this time period, the total installed capacity of PVs has grown from <1 MW to >1,000,000 MW.

solar module cost and installed capacity[1]
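The two trends above can be combined into a single number. A minimal sketch using only the endpoints quoted in the text (treated as exact, even though they are bounds) computes the average annual cost decline and an implied Wright's-law learning rate, i.e. the fractional cost drop per doubling of cumulative installed capacity.

```python
import math

# Endpoints quoted in the text (treated as exact for illustration)
cost_start, cost_end = 100.0, 0.3          # module cost, $/W, 1976 -> 2020
capacity_start, capacity_end = 1.0, 1e6    # cumulative installed capacity, MW
years = 2020 - 1976

# Average annual rate of cost decline over the period
annual_decline = 1 - (cost_end / cost_start) ** (1 / years)

# Learning rate: cost drop per doubling of cumulative capacity (Wright's law)
capacity_doublings = math.log2(capacity_end / capacity_start)
cost_halvings = math.log2(cost_start / cost_end)
learning_rate = 1 - 2 ** (-cost_halvings / capacity_doublings)

print(f"{cost_start / cost_end:.0f}x cheaper, ~{annual_decline:.0%} per year")
print(f"~{capacity_doublings:.1f} capacity doublings, learning rate ~{learning_rate:.0%}")
```

With these endpoints the implied learning rate comes out around 25% per doubling; because the capacity figures are bounds, treat it as a rough estimate.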

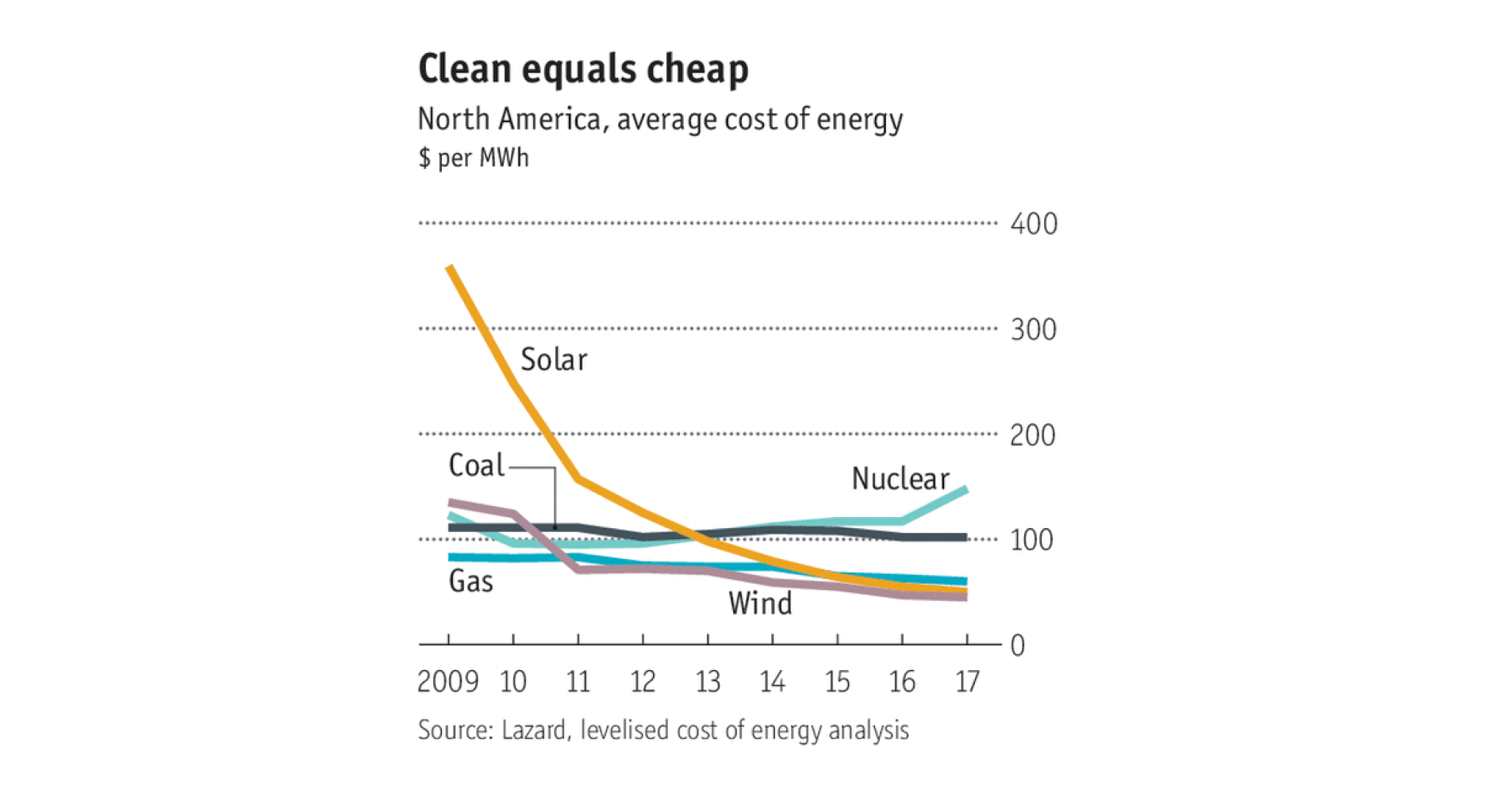

Because of this trend, solar has now gone from being prohibitively expensive to being one of the cheapest energy sources, rivaled only by wind in cost.

solar cost of energy rapidly decreasing[2]

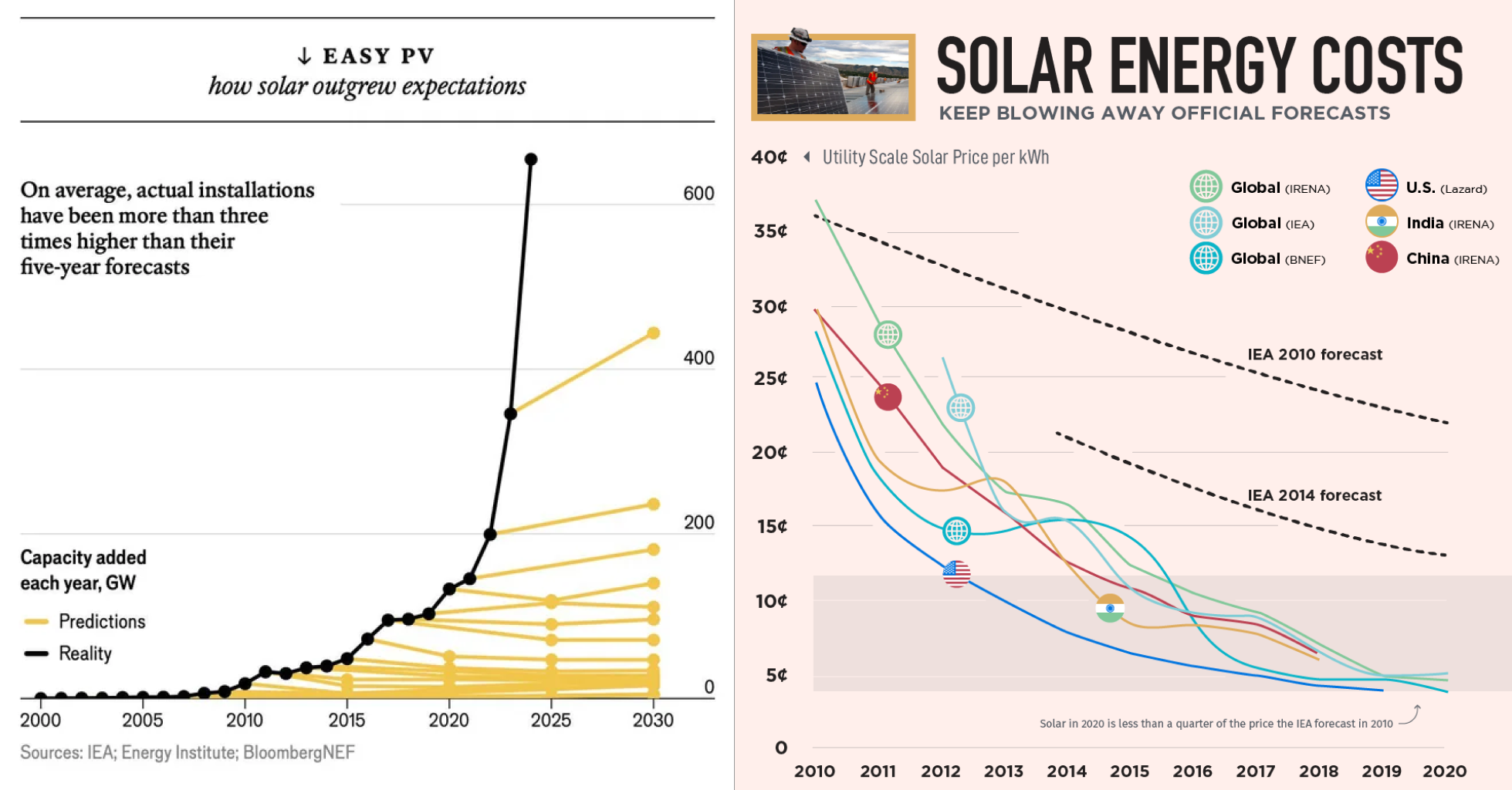

The rate of progress in solar PV innovation has been unexpected: solar has consistently beaten cost and installed capacity projections over the past 20 years.

projections for solar installed capacity and cost have been consistently wrong[3]

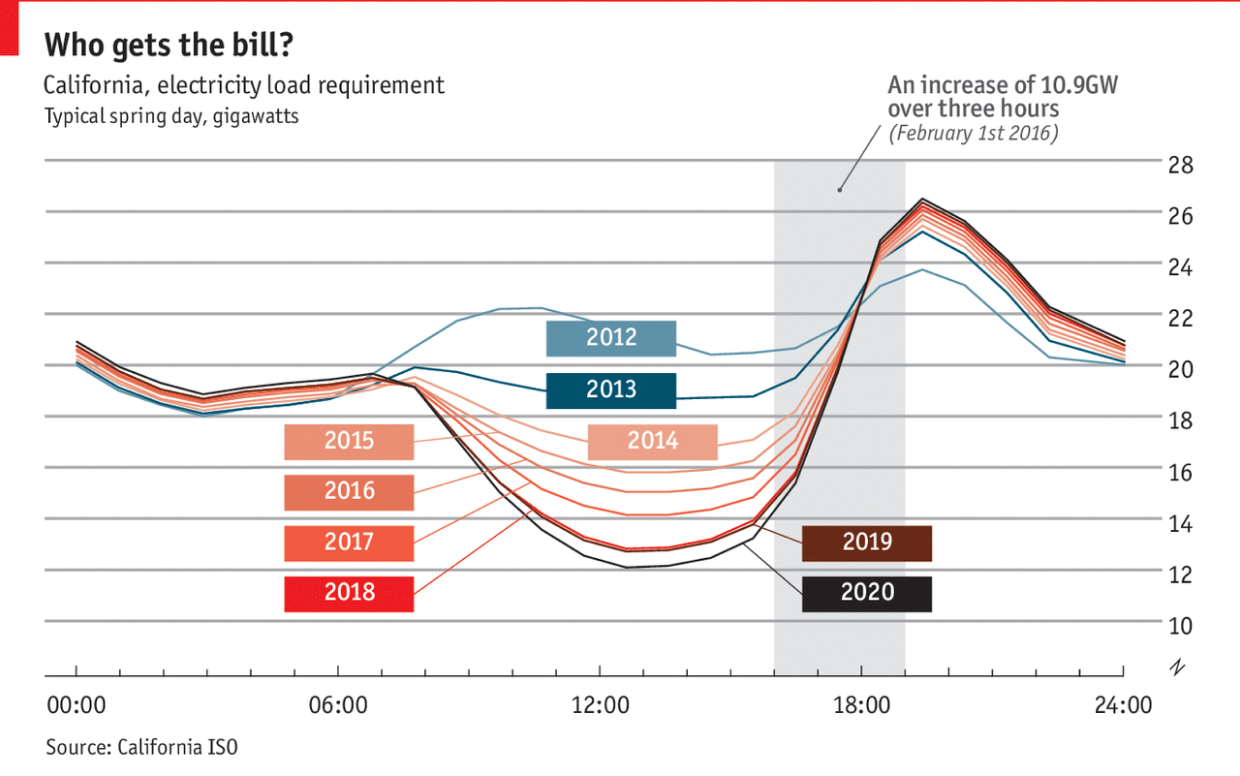

One of the main obstacles to more widespread solar adoption has been its intermittency: solar output peaks during the lowest demand hours of the day.

This has created the solar duck curve we discussed in Part IV, which puts extra pressure on the grid to quickly ramp up generation capacity before peak hours. This is costly and makes grid balancing challenging.

suboptimal solar generation time creating the duck curve[5]
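To see how the duck curve forms, subtract solar output from demand to get the "net load" that the rest of the grid must supply. The hourly profiles below are invented for illustration, not real grid data; the sketch finds the midday trough and the steepest evening ramp.

```python
# Hypothetical hourly profiles (GW) for one spring day on a solar-heavy grid.
# Illustrative numbers only, not real data.
demand = [22, 21, 20, 20, 21, 23, 25, 26, 26, 26, 26, 26,
          26, 26, 26, 27, 28, 30, 32, 32, 30, 28, 25, 23]
solar = [0, 0, 0, 0, 0, 0, 1, 4, 8, 12, 14, 15,
         15, 14, 12, 9, 5, 1, 0, 0, 0, 0, 0, 0]

# Net load: what non-solar generation (gas, hydro, imports, ...) must supply each hour.
net_load = [d - s for d, s in zip(demand, solar)]


def max_ramp(series: list[int], window: int) -> tuple[int, int]:
    """Largest increase in `series` over any `window`-hour span, and the hour it starts."""
    start = max(range(len(series) - window),
                key=lambda h: series[h + window] - series[h])
    return series[start + window] - series[start], start


trough = min(net_load)
ramp, ramp_start = max_ramp(net_load, 3)
print(f"Midday trough: {trough} GW at hour {net_load.index(trough)}")
print(f"Steepest 3-hour ramp: +{ramp} GW starting at hour {ramp_start}")
```

The deeper the midday trough, the larger the late-afternoon ramp that other generators must cover as solar fades.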

Lithium-ion Batteries

Grid storage is the solution to this solar intermittency problem. Pumped hydro has helped with this in the past, but viable locations for pumped hydro plants are rare, and these facilities are expensive to construct.

Declining costs and increasing energy densities have made lithium-ion batteries the optimal technology for grid storage.

Lithium-ion batteries' use of cheap, light materials and intercalation has given them lower costs and higher energy densities than other batteries.

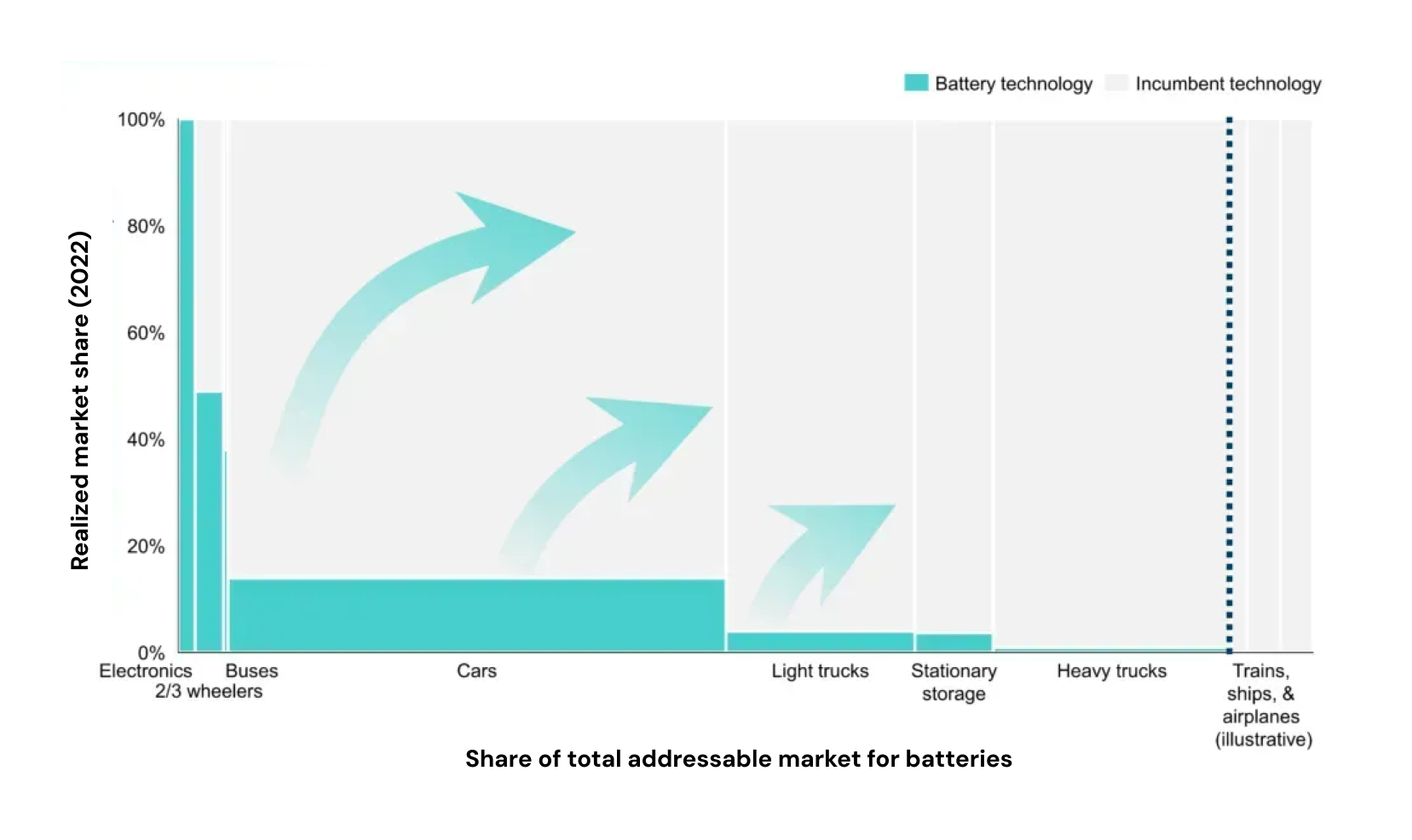

Over the past 40 years, lithium-ion battery adoption has expanded into new industries in a domino effect where innovations driven by revenues from one industry bring costs and energy densities to a level that unlocks new market opportunities in another industry.

This started with personal computing devices, which brought down costs enough to make electric vehicles viable, which then brought down costs enough for grid storage to now be viable.

domino effect of battery adoption[6]

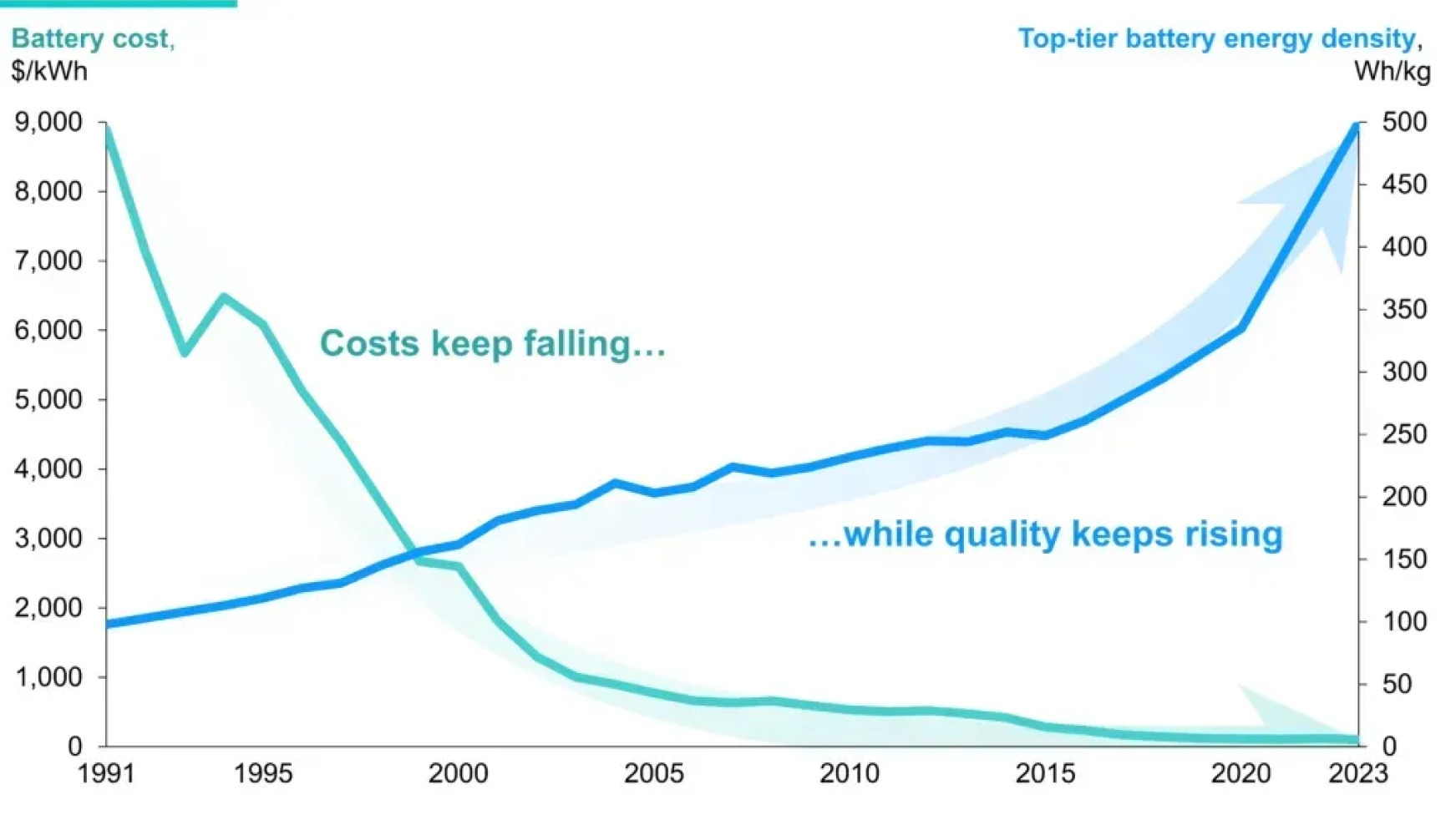

Because of this process, the development of new battery chemistries has driven down the cost of battery storage from >$9000/kWh in 1990 to <$150/kWh in 2024 for a >60x price reduction. In the same time period, energy densities have increased by >5x.

battery costs have decreased rapidly while energy densities have increased rapidly

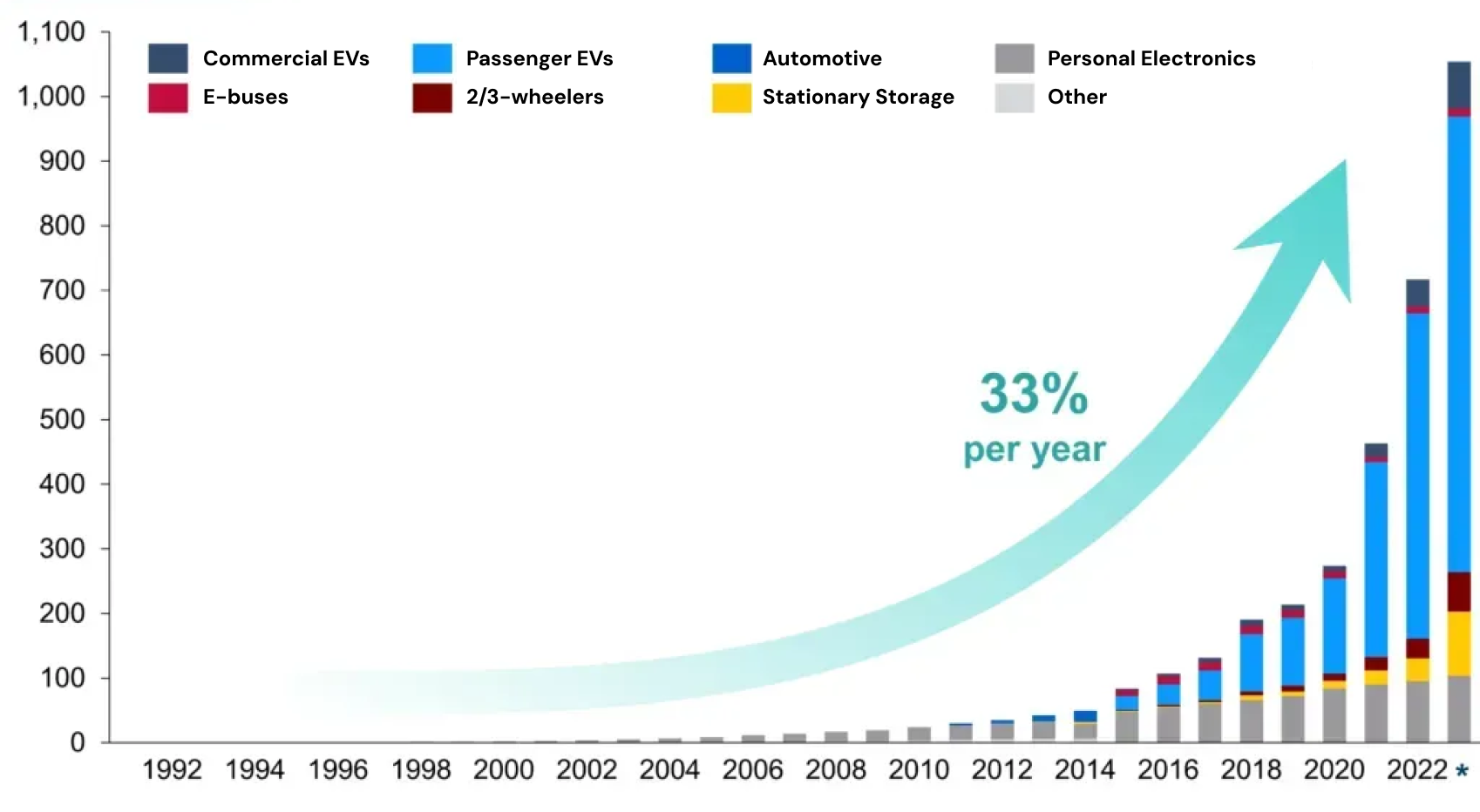

These innovations have driven rapid adoption, with demand for batteries increasing exponentially over the same period.

In the graph below, we can see the exponential growth in overall battery demand, as well as the contribution by newly unlocked industries, with a clear transition point for automotive EV battery needs (blue) in 2010, and the beginning of grid storage demand for batteries (yellow) around 2015.

battery demand has grown exponentially as new industries adopted batteries[6]

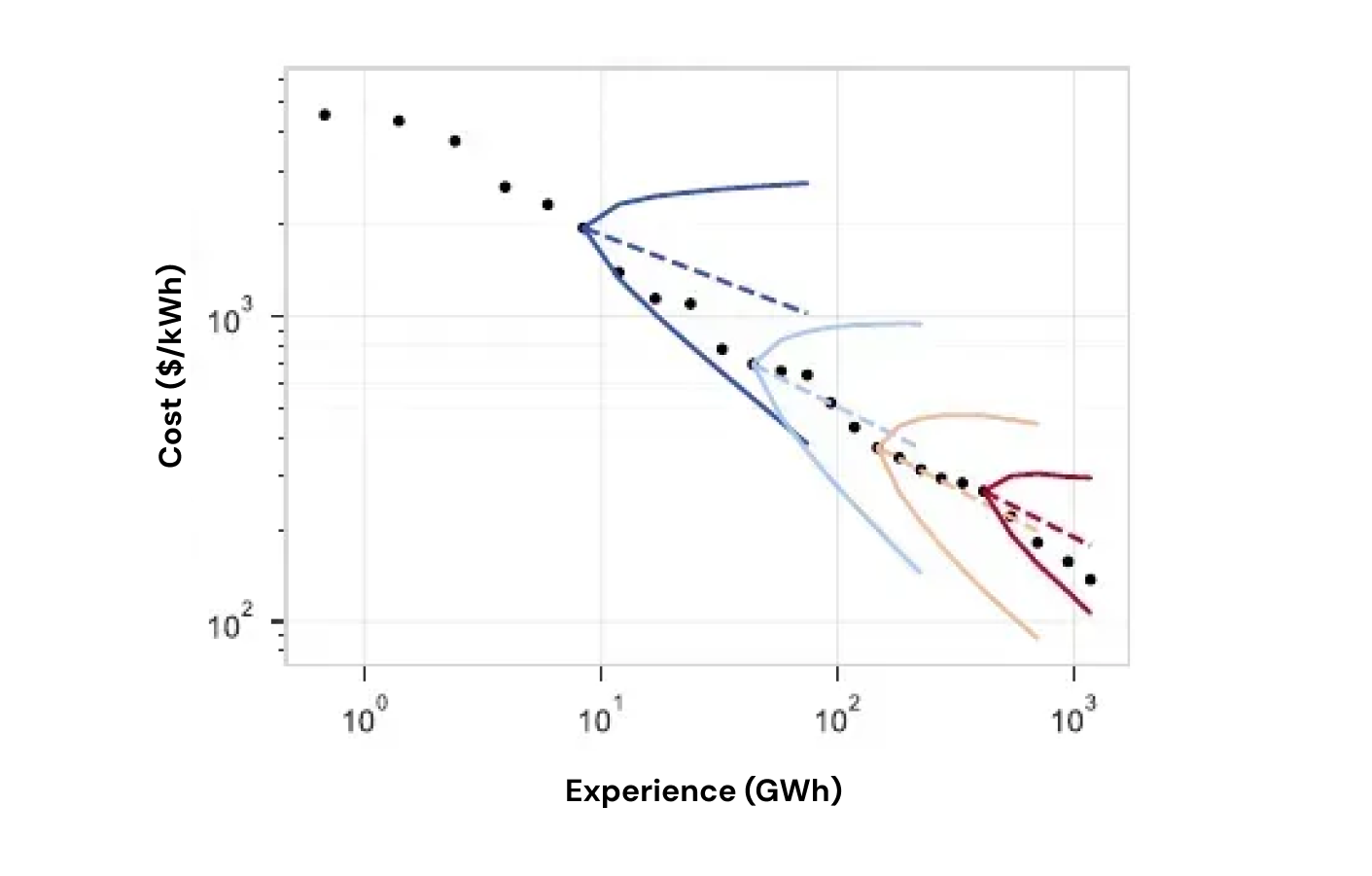

Looking at the relationship between battery cost improvements and cumulative manufacturing experience, we see that battery prices tend to decrease by ~25% for every doubling of cumulative production.

battery costs vs. experience (1996-2020)[7]
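The relationship above is usually called Wright's law: price falls by a fixed fraction with each doubling of cumulative production. A minimal sketch, assuming the ~25% learning rate from the text and the ~$150/kWh price quoted earlier as a starting point; the function names are our own.

```python
import math

LEARNING_RATE = 0.25  # from the text: ~25% price drop per doubling of cumulative production


def wright_price(initial_price: float, production_multiple: float,
                 learning_rate: float = LEARNING_RATE) -> float:
    """Price after cumulative production grows by `production_multiple` (Wright's law)."""
    doublings = math.log2(production_multiple)
    return initial_price * (1 - learning_rate) ** doublings


def doublings_to_reach(initial_price: float, target_price: float,
                       learning_rate: float = LEARNING_RATE) -> float:
    """Doublings of cumulative production needed to reach `target_price`."""
    return math.log(target_price / initial_price) / math.log(1 - learning_rate)


print(f"After 3 doublings (8x production): ${wright_price(150, 8):.2f}/kWh")
print(f"Doublings from $150 to $10/kWh: {doublings_to_reach(150, 10):.1f}")
```

Under a 25% learning rate, going from ~$150/kWh to ~$10/kWh takes roughly 9.4 doublings of cumulative production, which gives a sense of how much scale-up such price projections assume.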

How long can this trend continue?

We've now reached the point where LFP (lithium iron phosphate) battery costs are rapidly approaching the cost of the lithium used in the batteries, meaning that further LFP price declines will soon be constrained by materials costs.

However, new chemistries make further cost decreases likely. The adoption of sodium-ion chemistries will make batteries even cheaper, since sodium is 30x cheaper than lithium.

If we assume sodium-ion batteries improve at a rate similar to the one lithium-ion saw, these batteries could cost ~$10/kWh by 2028, compared with the current ~$150/kWh cost of lithium-ion batteries.

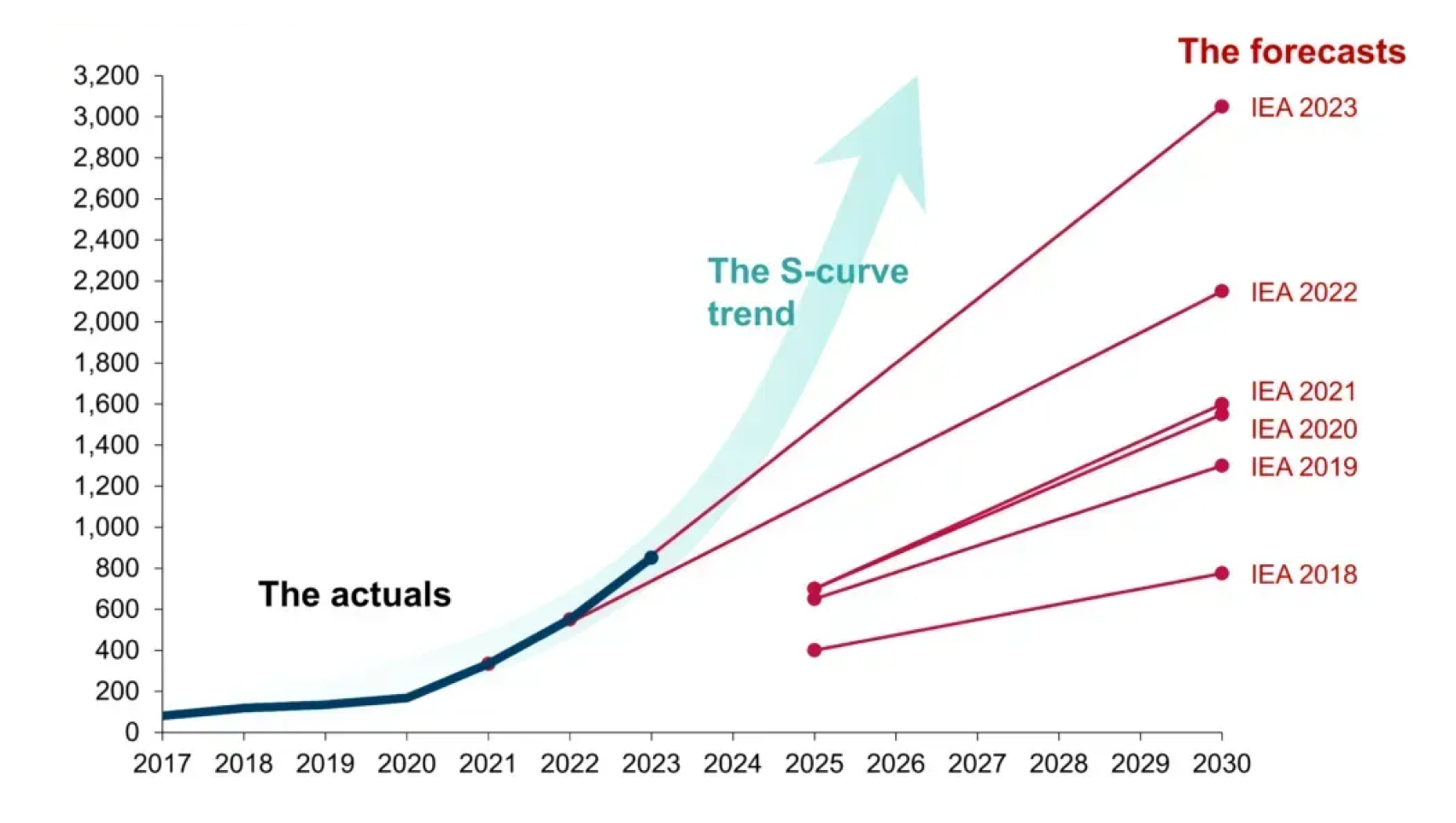

As with solar, battery adoption forecasts over the past decade have consistently underestimated the rate of progress.

battery predictions have consistently underestimated adoption[6]

Aside: Why are solar and battery innovations happening so fast?

We've seen exponential cost and efficiency improvements in both photovoltaics and lithium-ion batteries over the past 40 years. Why have these technologies progressed so much faster than almost everything else in energy?

The answer is manufacturing.

Building things in factories gives you a point of leverage to rapidly innovate.

Incremental improvements to production processes accumulate, driving down costs and improving product qualities at scale.

Combine this with rising revenues from a growing industry riding major secular tailwinds, and increasing R&D investment compounds these gains by further improving production and revenue, leading to a runaway innovation curve.

We saw this same effect in the original expansion of the automotive industry with the invention of the assembly line, and the exponential growth of the semiconductor industry with the constant innovations made to fabrication facilities.

Other energy generation methods that rely on large power plants and one-off construction can't capture the same price declines from these kinds of industrial process improvements.

We'll see that this insight has also driven recent developments in the nuclear industry, with the creation of small modular reactors.

All of these improvements have made lithium-ion batteries good enough for grid storage.

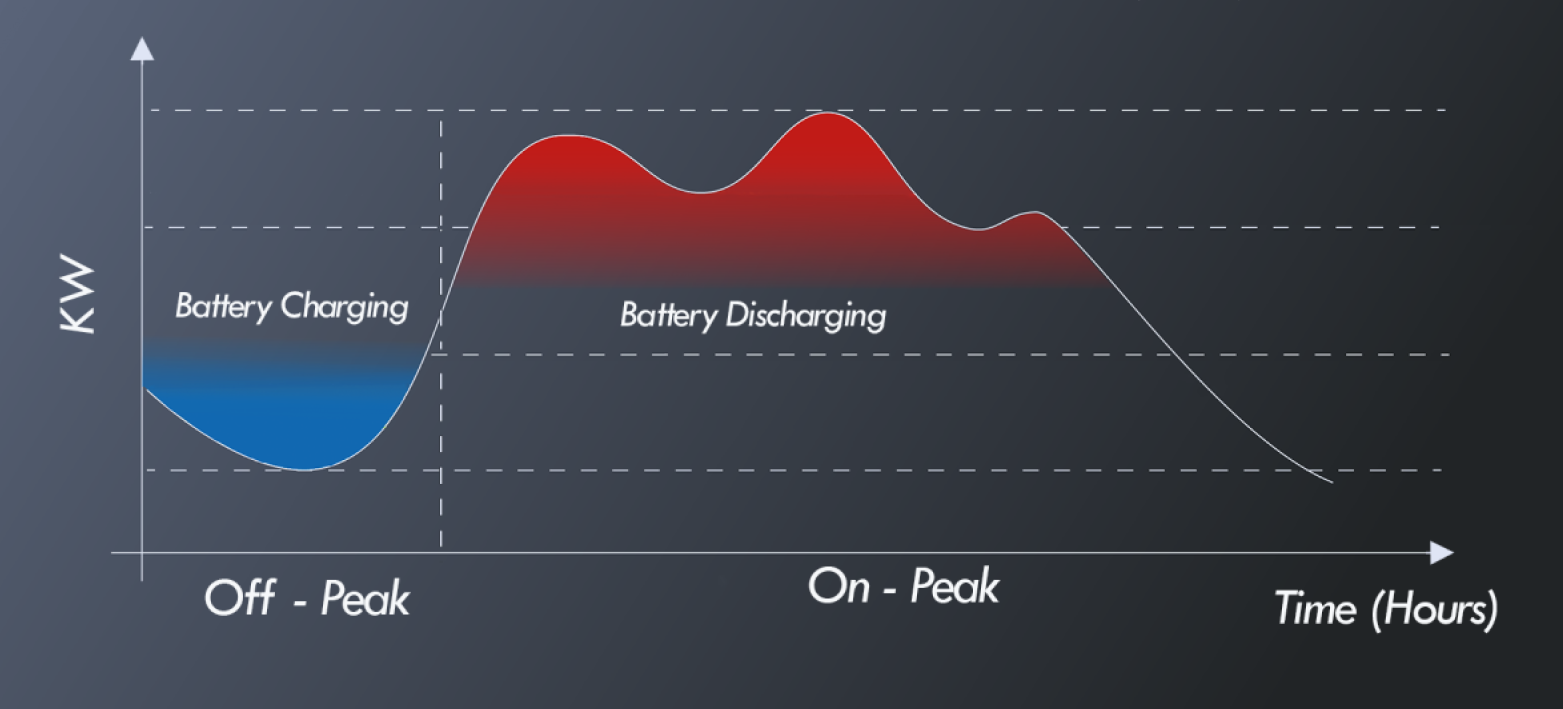

Like other grid storage systems, battery storage has helped with maintaining reserve capacity on the grid and peak shaving, where excess electricity is stored at times of low demand and released during peak demand to reduce the strain on grid generation facilities.

batteries discharge during peak demand hours to ease generation needs
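Peak shaving can be sketched as a simple greedy dispatch rule: discharge whenever load exceeds a threshold, recharge whenever it's below. This is a hypothetical illustration, not how real operators dispatch batteries (they typically plan around forecasts and prices); it assumes hourly steps, a battery that starts full, and no round-trip losses.

```python
def peak_shave(load_mw: list[float], capacity_mwh: float, power_mw: float,
               threshold_mw: float) -> list[float]:
    """Return the grid load (MW) after a battery shaves peaks above `threshold_mw`.

    Hourly steps, so MW and MWh are interchangeable per step. The battery starts
    full, discharges when load exceeds the threshold, and recharges from spare
    headroom below it. Round-trip losses are ignored.
    """
    stored = capacity_mwh
    result = []
    for load in load_mw:
        if load > threshold_mw:
            # Discharge, limited by the excess, the inverter rating, and stored energy.
            out = min(load - threshold_mw, power_mw, stored)
            stored -= out
            result.append(load - out)
        else:
            # Recharge, limited by headroom, the inverter rating, and free capacity.
            charge = min(threshold_mw - load, power_mw, capacity_mwh - stored)
            stored += charge
            result.append(load + charge)
    return result


evening_load = [60, 55, 50, 70, 90, 100, 80, 60]  # hypothetical hourly load, MW
shaved = peak_shave(evening_load, capacity_mwh=40, power_mw=20, threshold_mw=80)
print(max(evening_load), "->", max(shaved))
```

In this example a 40 MWh battery caps the 100 MW peak at 80 MW, then refills once load drops back below the threshold.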

Battery storage systems are especially useful for smoothing out renewable intermittency, given their low cost, ease of construction, and fast charging and discharging.

Solar + Storage

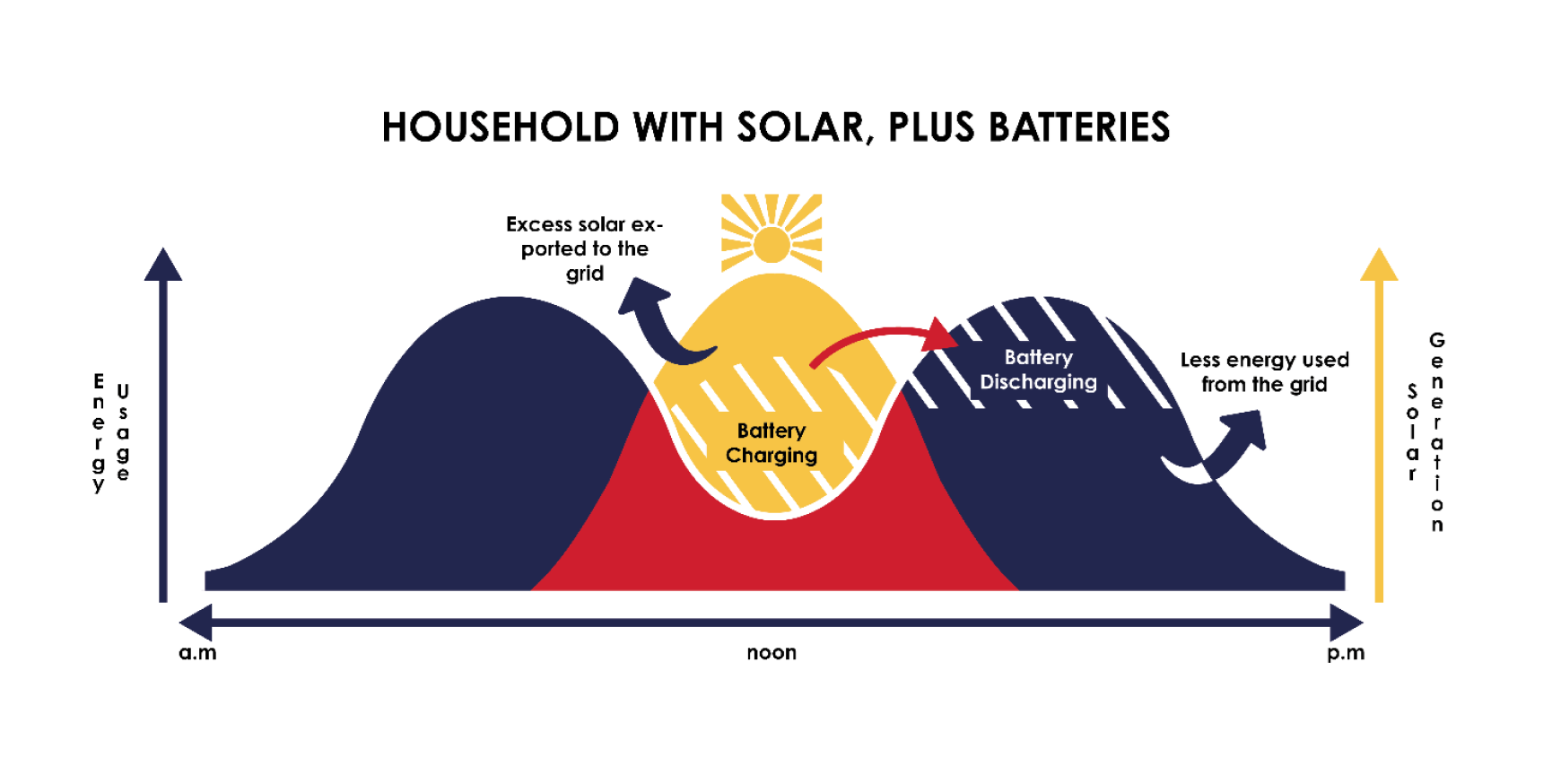

Collocating solar photovoltaics and battery storage systems allows hybrid solar plants to shift solar electricity generation, which is strongest during the low demand hours of the day, to be deployed during high demand hours.

For residences with hybrid solar setups, this shifts their energy demand to become less dependent on the grid.

residential demand shift due to hybrid solar
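For a single home, the shift can be sketched as: store surplus solar during the day, then draw from the battery before importing from the grid. A minimal, hypothetical model that ignores round-trip losses and treats surplus beyond the battery's capacity as exported or curtailed; the demand and solar numbers are made up.

```python
def grid_imports(demand_kwh: list[float], solar_kwh: list[float],
                 battery_kwh: float) -> list[float]:
    """Energy drawn from the grid in each period for a home with solar + battery."""
    stored = 0.0
    imports = []
    for demand, solar in zip(demand_kwh, solar_kwh):
        surplus = solar - demand
        if surplus >= 0:
            # Extra solar charges the battery; anything beyond capacity is exported/curtailed.
            stored = min(battery_kwh, stored + surplus)
            imports.append(0.0)
        else:
            # Shortfall: use stored energy first, then the grid.
            from_battery = min(stored, -surplus)
            stored -= from_battery
            imports.append(-surplus - from_battery)
    return imports


# Six hypothetical time blocks, morning through late evening (kWh per block)
demand = [1, 1, 1, 3, 4, 2]
solar = [2, 4, 3, 0, 0, 0]
print("Grid imports without battery:", sum(grid_imports(demand, solar, 0)))
print("Grid imports with 5 kWh battery:", sum(grid_imports(demand, solar, 5)))
```

Here the battery cuts evening grid imports from 9 kWh to 4 kWh by moving midday solar into the evening.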

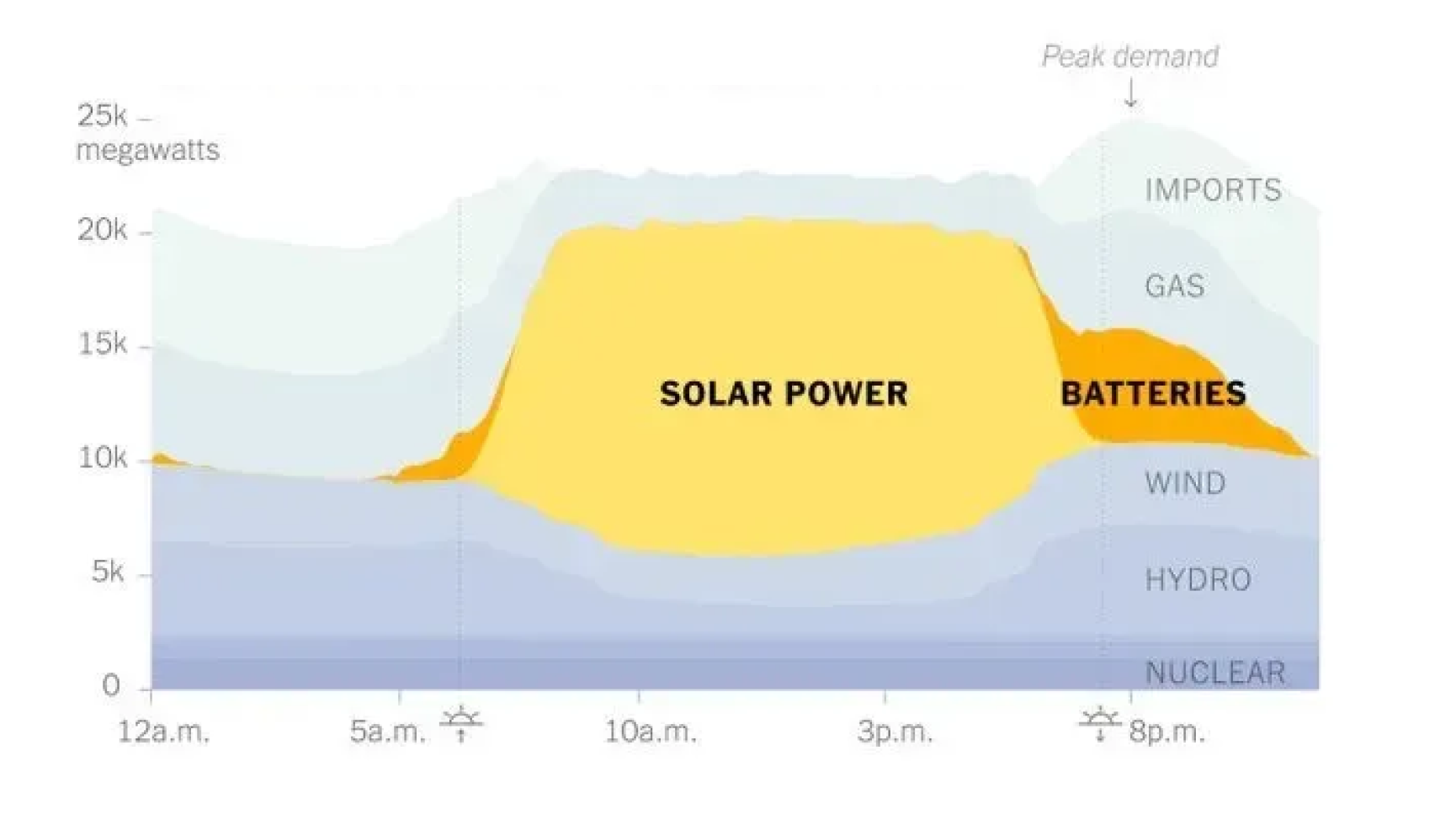

At a grid scale, hybrid solar plants allow for the deployment of cheap solar energy at peak hours, bringing down the cost of electricity. We can see this already in effect in California, with a substantial portion of solar energy being discharged by batteries during peak hours.

California power sources for an average day in April 2024[8]

As the costs of photovoltaics and batteries are decreasing, larger scale hybrid solar plants are being planned around the world.

For example, the largest hybrid solar plant in the US came online in 2024 in the Mojave Desert in California. This project uses >120,000 lithium-ion batteries and >2,000,000 solar modules to provide 875 MW of power and 3.287 GWh of storage capacity. This is enough power to supply >600,000 homes.

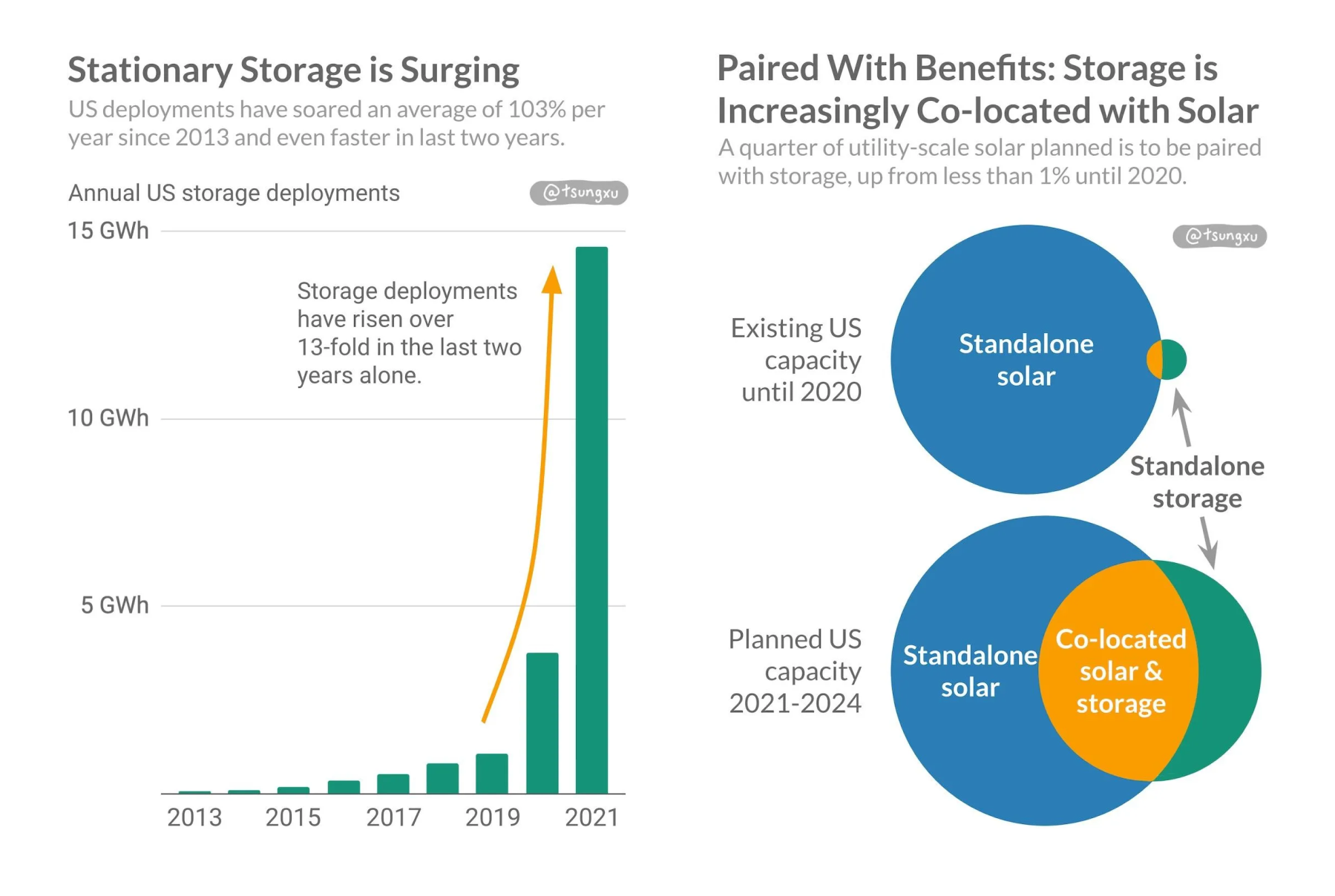

With clear cost advantages and the relative ease of constructing hybrid solar plants, demand for battery grid storage systems increased 13x between 2019 and 2021 alone.

demand for battery grid storage and hybrid solar is increasing rapidly[9]

The one caveat worth noting is that hybrid solar plants aren't yet capable of supporting base-load needs on the grid. This would require 16-18h of storage capacity to discharge solar energy during the darker hours, which isn't yet feasible.

If we do get to this point, solar will be fully able to replace the grid base-load and may become a primary energy source. In the meantime, nuclear may be our best alternative to replace the base-load currently supplied by coal.
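The gap between today's plants and base-load solar can be expressed in hours of storage. A quick calculation using the Mojave plant's figures from above, assuming the plant would need to sustain its full 875 MW output from storage for the 16-18 hours mentioned in the text:

```python
# Mojave hybrid plant figures from the text
power_mw = 875
storage_mwh = 3_287

# Hours the batteries can sustain full output on their own
duration_h = storage_mwh / power_mw

# Storage needed to sustain full output for 16-18 hours, and its multiple of today's capacity
needed_mwh = [power_mw * hours for hours in (16, 18)]
multiples = [needed / storage_mwh for needed in needed_mwh]

print(f"Current storage duration: {duration_h:.1f} h")
for needed, multiple in zip(needed_mwh, multiples):
    print(f"{needed / 1000:.1f} GWh needed ({multiple:.1f}x current storage)")
```

Even the largest US hybrid plant holds under 4 hours of full-power storage, so round-the-clock operation would need roughly 4-5x its current storage capacity.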

Outlook on Hybrid Solar

Solar technology is already working, and solar energy is abundant enough to serve all our needs. We have plenty of space to build new hybrid solar plants, and residential hybrid solar installations are further increasing adoption.

Photovoltaics and lithium-ion batteries are getting cheaper and more energy efficient very quickly, and demand is growing exponentially.

So hybrid solar is clearly primed to take over the energy system within the next decade.

Small Modular Reactors

Nuclear isn't ready for mass adoption in the next decade in the way that solar is. But recent progress in small modular reactors (SMRs) suggests that it has a chance to become a dominant part of our energy system in the decades after.

In Part III, we saw that all the energy we use originated from the nuclear reactions in stars.

While we work toward capturing the energy from our Sun's nuclear reactions with photovoltaics, it also makes sense to try to harness the potential of nuclear power on earth.

Development costs for these projects are large, but unlike other energy sources, nuclear energy is abundant if we can make it work safely at scale.

The Decline of Nuclear

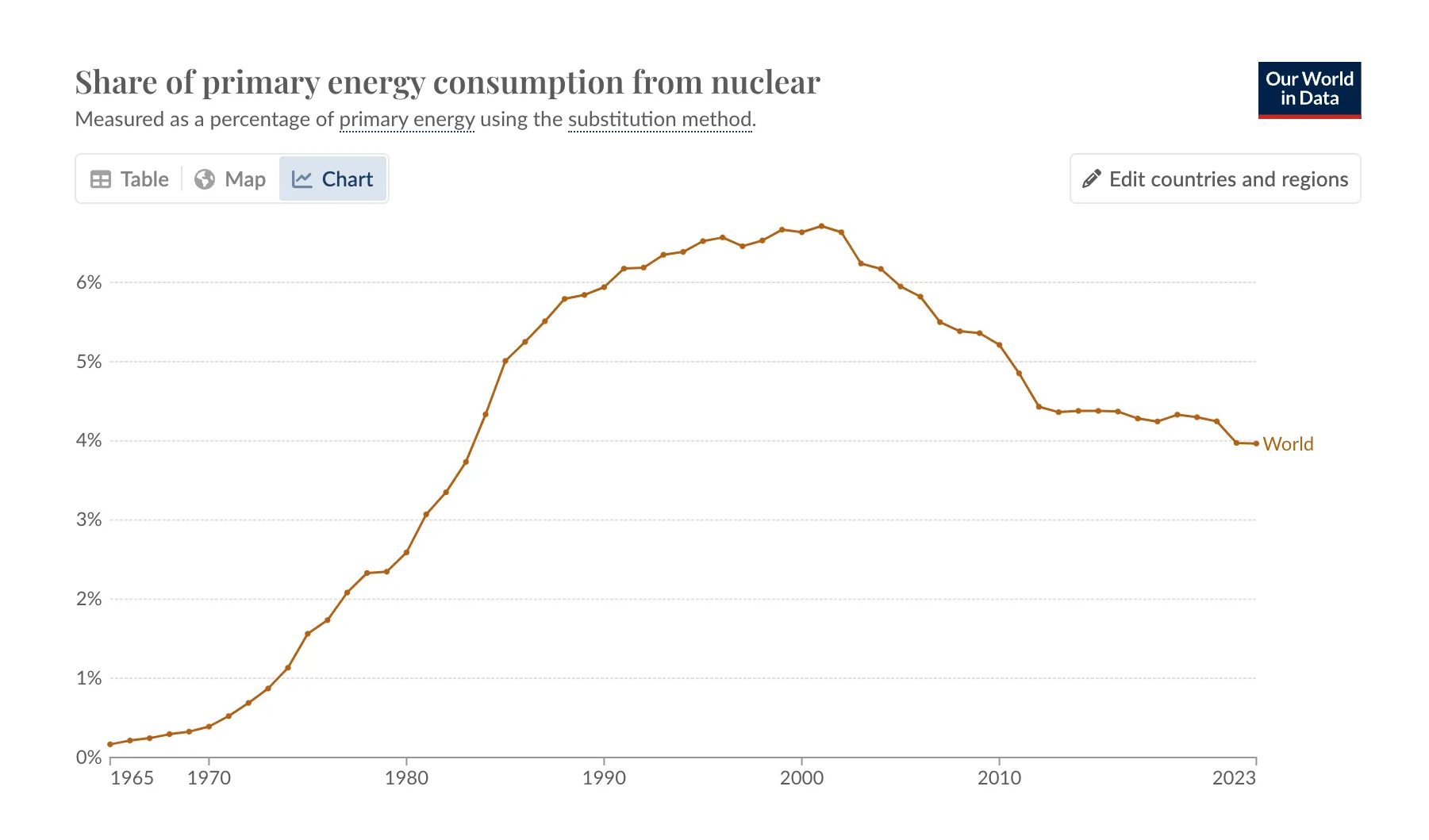

The share of global energy provided by nuclear power plants has declined from ~7% in 2000 to <4% in 2024.

the global share of nuclear energy production is decreasing[10]

This is the result of:

- Damage to the public perception of nuclear after accidents like the Chernobyl meltdown in 1986 and Fukushima accident in 2011.

- Safety concerns that have led to stricter regulations, preventing the development of new nuclear facilities.

- High upfront capital demands and the difficulty of building new nuclear facilities make nuclear less economically competitive than cheaper alternatives like wind and solar.

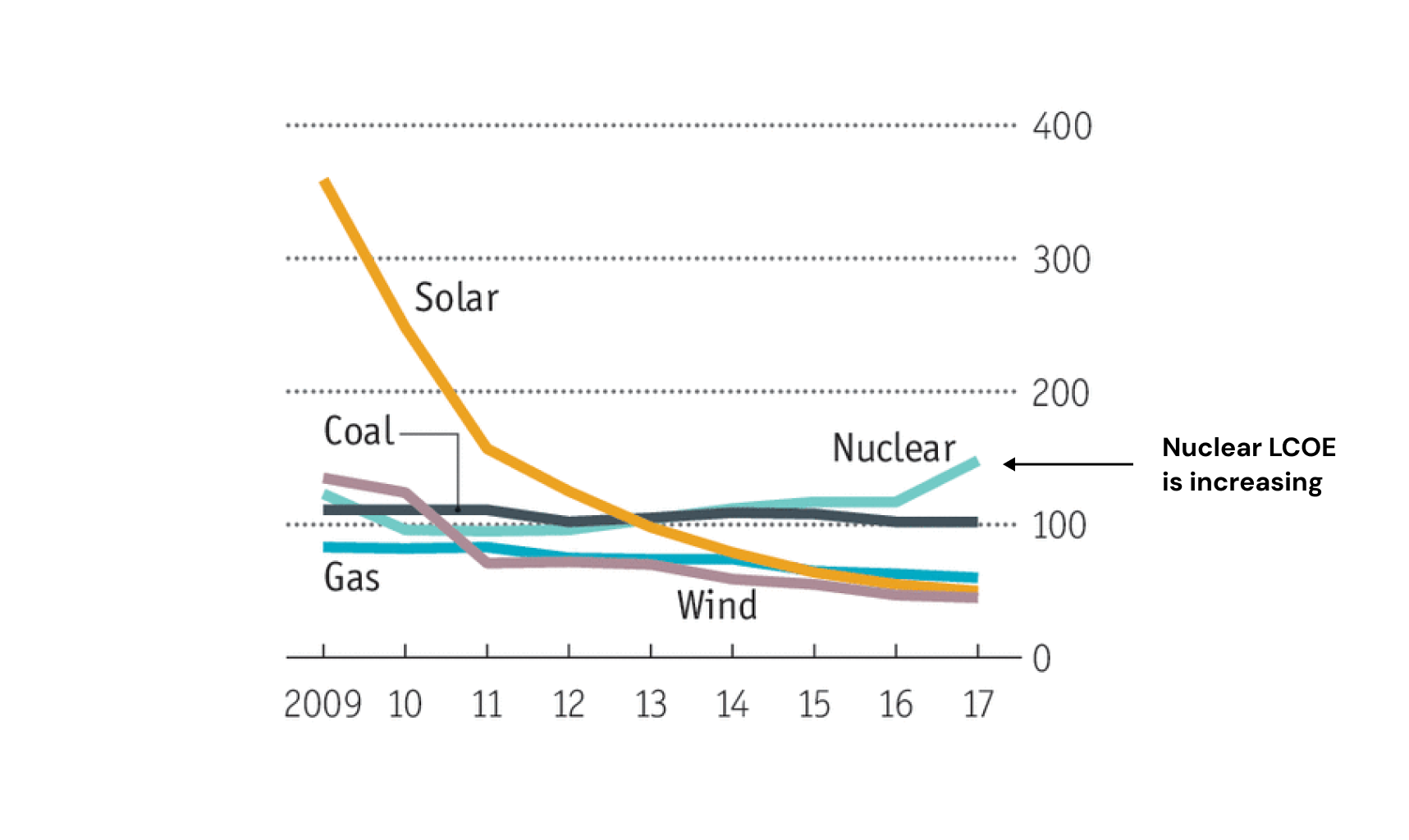

If we look at the energy cost trends by generation source again, we'll see that the cost of nuclear energy has actually been increasing over the past decade.

the cost of nuclear has been rising

The Bellefonte Nuclear Power Plant project in Alabama is the perfect representation of the stagnation of the nuclear industry since the 1950s.

The Bellefonte plant originally started construction in 1975.

Then due to a combination of regulatory challenges, financial constraints, and construction inefficiencies, the project went through a series of delays and stop/restart cycles lasting 40 years.

In 2021, the project was still not complete and had provided no power.

Why SMRs?

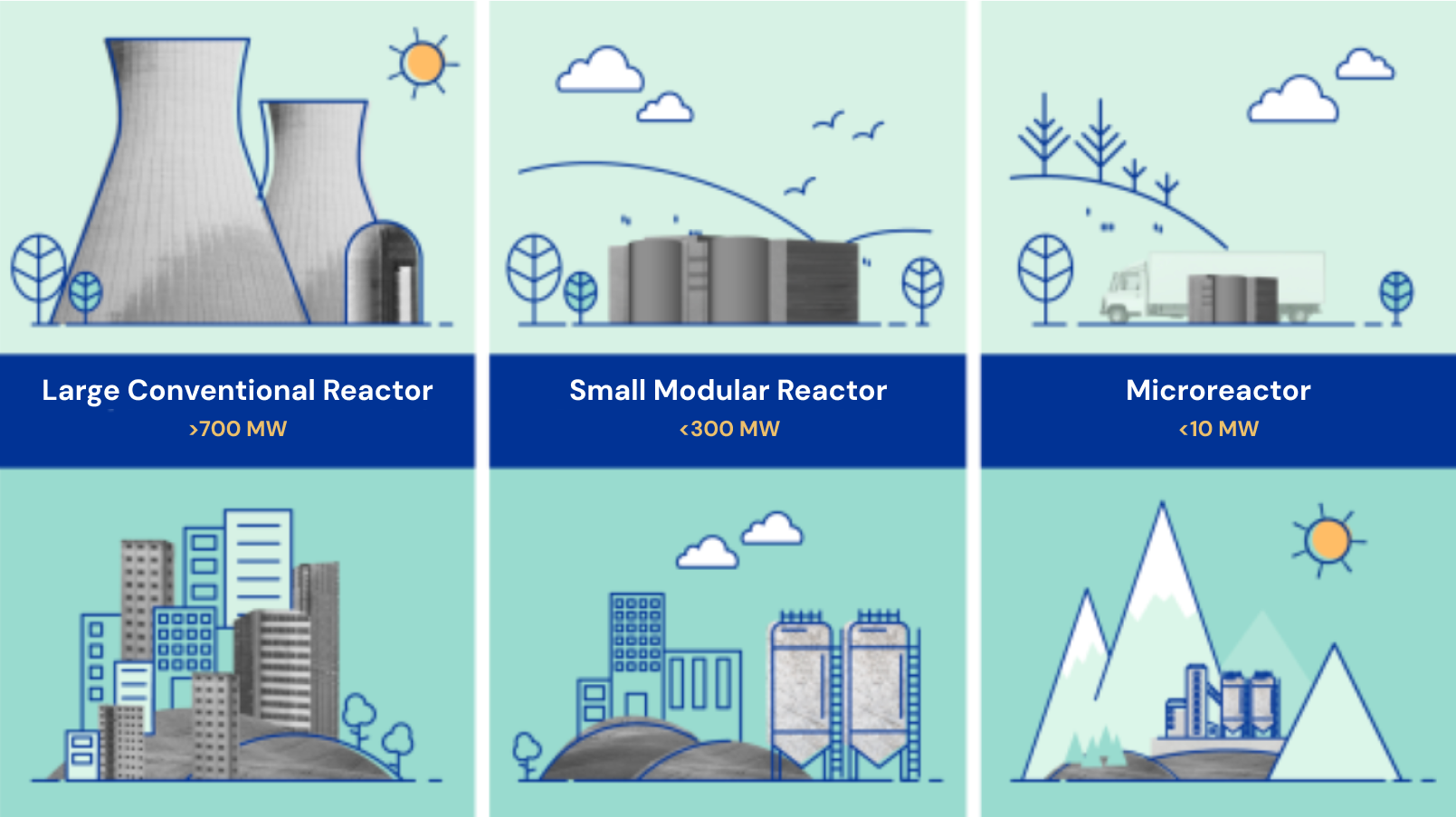

Small modular reactors are defined as nuclear reactors with <300 MW output, compared with the typical 1 GW output of modern reactors.

They're usually built in a factory as modules with much less than 300 MW capacity each, which are then transported to the site and assembled into larger facilities.

By building modules in factories, SMRs can take advantage of the industrial process efficiency gains that have benefitted solar and batteries.

Constructing an SMR facility takes ~3-5 years, down from the 10-15 years for the previous generation of reactors. Additionally, SMRs often offer a cheaper cost of energy than traditional nuclear facilities.

comparison of different nuclear reactor sizes

NuScale, one of the largest US SMR startups, is building 60 MW reactor modules that cost ~$300 million each. This allows for investment in smaller scale facilities, rather than requiring the $5 billion upfront capital expenditure to build a traditional reactor. NuScale says it plans to build a 720 MW plant (12 modules) for $3 billion, 20% cheaper than larger reactors.

Oklo, another popular SMR startup backed by Sam Altman, is planning to produce an "Aurora powerhouse" reactor module with 15 MW output for $70 million.

Oklo's Aurora powerhouse SMR[11]
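Normalizing the quoted prices by output makes the comparison clearer. A minimal sketch using the figures in the text; pairing the $5 billion traditional reactor with a 1 GW output is our assumption, based on the typical reactor size quoted earlier.

```python
def cost_per_kw(cost_usd: float, output_mw: float) -> float:
    """Upfront capital cost per kilowatt of capacity."""
    return cost_usd / (output_mw * 1_000)


# (upfront cost in $, output in MW), as quoted in the text
projects = {
    "Traditional reactor (~1 GW)": (5_000e6, 1_000),
    "NuScale 60 MW module": (300e6, 60),
    "NuScale 720 MW plant": (3_000e6, 720),
    "Oklo Aurora 15 MW": (70e6, 15),
}

for name, (cost_usd, output_mw) in projects.items():
    print(f"{name:28s} ${cost_usd / 1e6:>6,.0f}M  ${cost_per_kw(cost_usd, output_mw):>6,.0f}/kW")
```

Per kilowatt, these quoted prices land in a similar range (roughly $4,200-5,000/kW); the bigger difference is the size of the upfront check, which ranges from $70 million to $5 billion.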

As we'll see in the next section, SMRs are also being built with safer designs, making them resistant to meltdowns.

So SMRs are superior to traditional reactors for a few simple reasons:

- Building reactors in modules enables more flexibility for smaller nuclear projects in more remote locations.

- This opens the possibility for lower upfront costs for investors who want to build smaller generation facilities.

- Building reactors in factories leads to efficiency gains that decrease construction times and may further decrease costs in the future.

- SMRs are safer than traditional reactors.

Safety

The safety of nuclear reactors comes down to their cooling systems.

The previous generation of reactors were mainly pressurized water reactors (PWRs) that used water circulating around the reactor chamber to remove excess heat.

The water needs to remain in its liquid state for it to keep carrying away heat efficiently. Pressurized pipes are used to maintain water as a liquid.

If anything happens to heat up water in the pipes enough that it turns to steam, it can lead to a runaway reaction where the steam no longer carries away heat, causing the reactor to heat up more and turn more water to steam.

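Eventually, pressure builds up, and a pipe can burst, damaging the nuclear facility and potentially sending radioactive fuel from the reactor core everywhere. Chernobyl used a different, graphite-moderated design (an RBMK reactor), but its 1986 disaster followed a similar pattern of runaway steam formation and rupture.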

This type of cooling system used in a PWR is known as an active cooling system since it requires active work to pump water around the reactor and keep it cool. If this active system fails, the reactor is prone to meltdown.

SMRs are shifting toward designs that use passive cooling and lower thermal power in reactor cores.

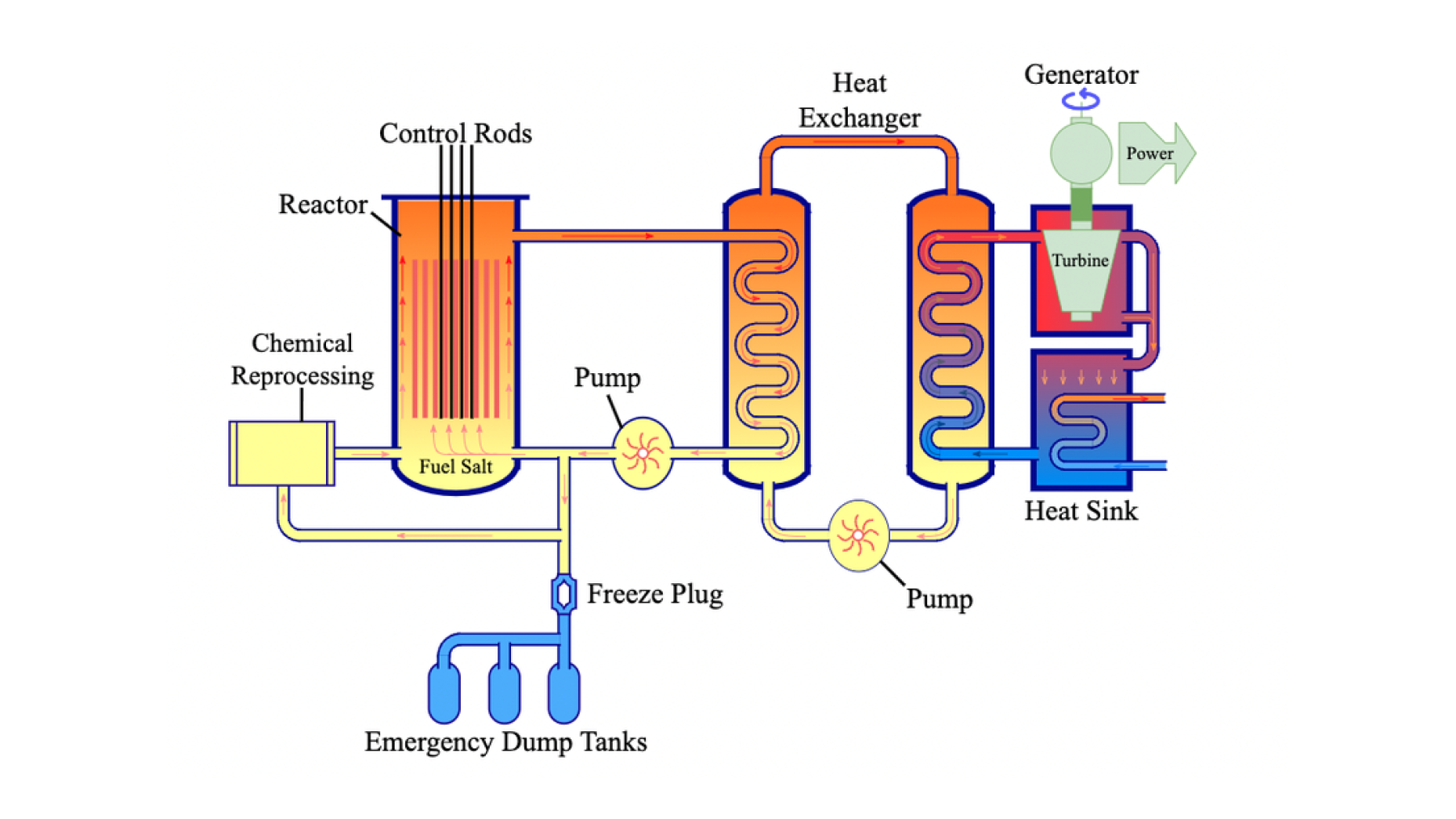

For example, China recently announced plans to build a thorium-breeding molten-salt SMR that is projected to come online by 2029. This reactor is a combination of many innovations.

First, these reactors combine their nuclear fuel and coolant into a single mixture that's circulated through the reactor. The Chinese reactor uses a molten salt fluid with thorium mixed into it.

Fast neutrons released from nuclear fission in this mixture either strike other fissile elements to continue the reaction or strike the salt in the molten salt mixture, transferring heat.

As the fluid moves into the heat exchanger, this heat is transferred to power the steam turbine.

Molten salt has a high boiling point (~1400 °C), so it's difficult for it to turn into gas. Additionally, because the fuel is mixed into the salt, if high pressure causes a leak in a pipe, the fissile material itself flows out of the pipes along with the coolant, which stops the reaction instead of letting it run away.

These reactors also use freeze plugs: plugs of frozen salt that seal off underground tanks large enough to hold all the circulating fluid. If the nuclear fuel gets too hot, the freeze plug automatically melts and the fuel mixture drains into these tanks, preventing further reactions.

the structure of a molten salt reactor[12]

If successful, the Chinese molten-salt reactor will also be one of the first reactors to use thorium breeding. In Part III, we saw that thorium is a much more abundant nuclear fuel source than the uranium-235 used by most reactors.

China has enough thorium reserves to power itself with these reactors for >20,000 years. As part of its Belt and Road Initiative, it plans to sell 100 MW thorium-breeding SMRs to other countries.

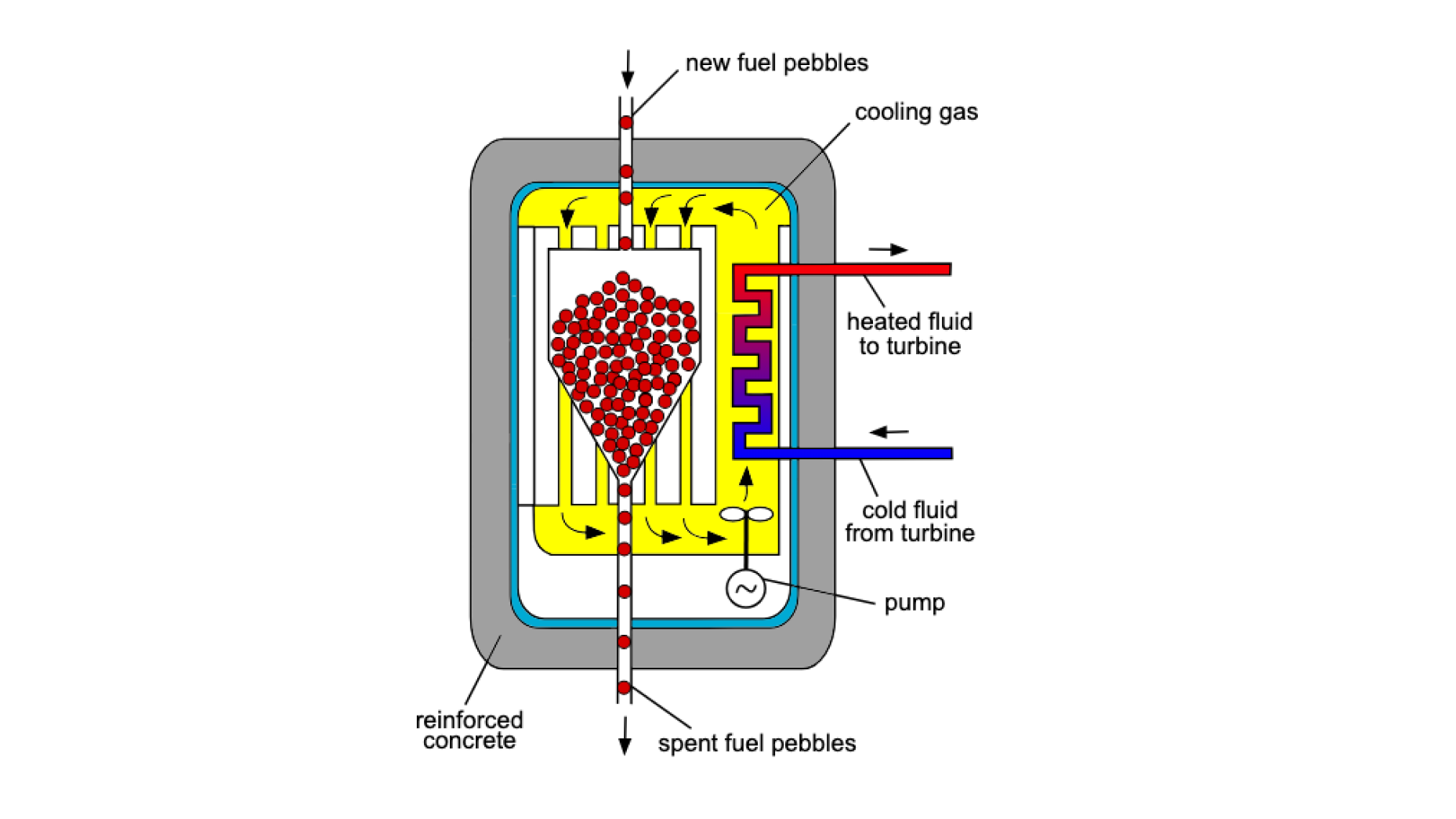

Though this reactor is planned for the future, China has already pushed the frontiers of nuclear safety with its high-temperature gas-cooled reactor pebble-bed module (HTR-PM), which started operation in 2023.

This reactor is an SMR that uses uranium fuel encased in small graphite "pebbles" that also act as the moderator. The graphite shells make the fuel more resistant to high temperatures and keep the radioactive fission products contained inside. This design also makes refueling easy: old pebbles can be removed from the bottom of the reactor core and new pebbles fed in, without the big refueling operations that current reactors require.

the structure of a pebble bed reactor core[13]

Instead of using water, the reactor uses helium gas as its coolant, which can withstand far more heat. It's also designed so that the fission reaction slows down on its own as the core heats up, letting the reactor cool passively.

Chinese researchers intentionally shut off the reactor's active cooling, a scenario that could cause a meltdown in a conventional reactor, and the reactor automatically shut itself down and cooled off. The Chinese HTR-PM is the first commercial meltdown-proof reactor ever constructed.
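Whether a reactor runs away or settles down on its own comes down to the sign of its temperature feedback. Below is a deliberately toy, dimensionless sketch of a core that has lost all active cooling; it is not a reactor physics model (it ignores decay heat, neutron kinetics, and passive heat loss), and `alpha` and `k` are illustrative parameters of our own.

```python
def run_without_cooling(alpha: float, steps: int = 50, k: float = 0.2) -> tuple[float, float]:
    """Toy model of a reactor core after active cooling is lost.

    alpha: temperature feedback, i.e. change in relative fission power per unit of
           temperature rise (negative = the reaction slows as the core heats up).
    k:     how strongly unremoved power heats the core each step.
    Returns (final relative power, final temperature rise), in arbitrary units.
    """
    temp_rise = 0.0
    power = 1.0  # nominal power at normal operating temperature
    for _ in range(steps):
        temp_rise += k * power                      # no coolant: all power heats the core
        power = max(0.0, 1.0 + alpha * temp_rise)   # linear temperature feedback on power
    return power, temp_rise


for alpha in (-0.5, 0.5):
    power, temp = run_without_cooling(alpha)
    print(f"alpha={alpha:+.1f}: power x{power:.3f}, temperature rise {temp:.1f}")
```

With negative feedback, fission power decays toward zero while the temperature levels off; with positive feedback, both keep climbing. Real passive-safety designs also depend on shedding residual decay heat without pumps, which this toy leaves out.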

China

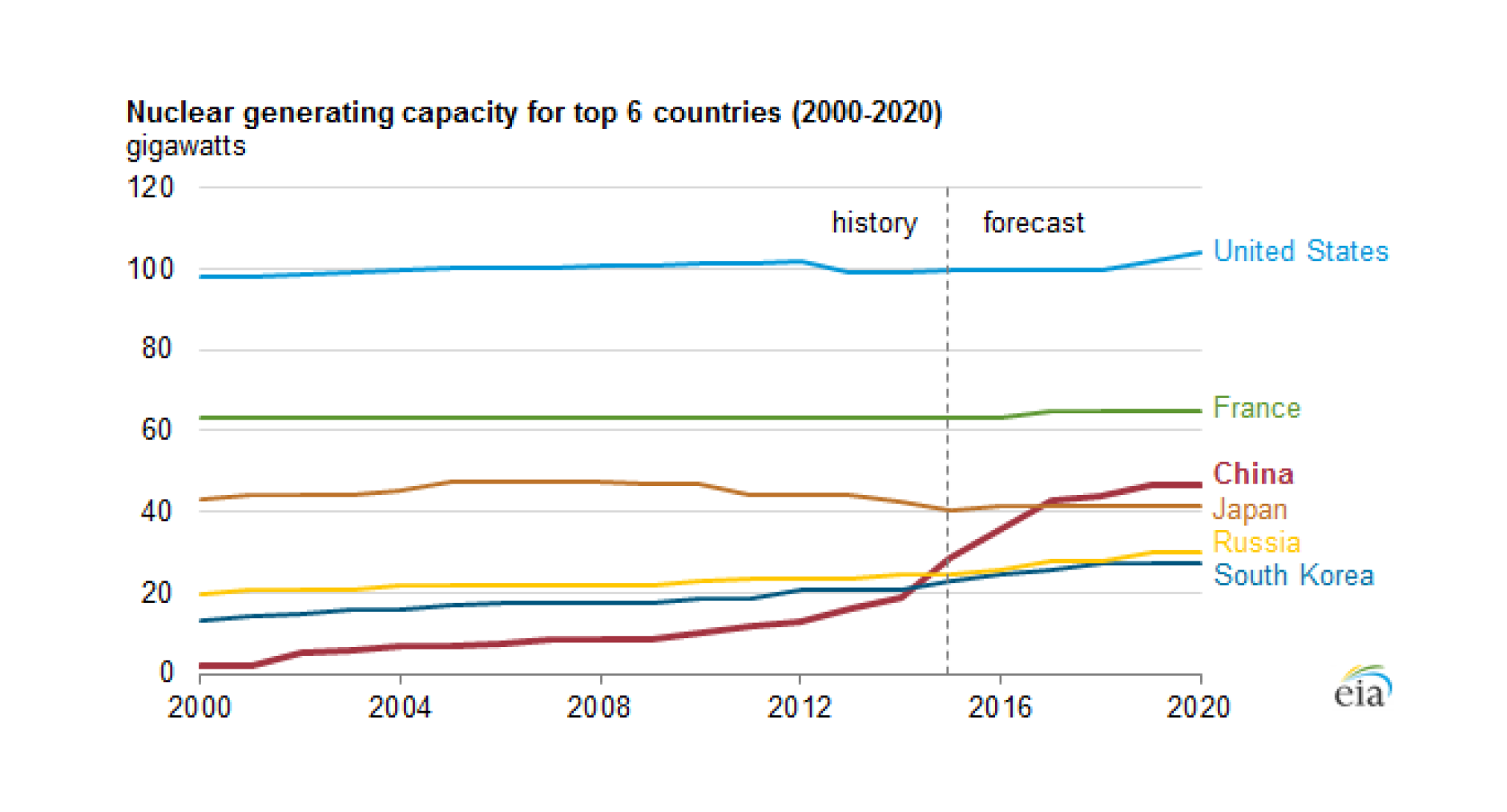

China is clearly winning the technology development competition in nuclear energy. While the US has throttled the construction of new nuclear facilities, China has risen to have the 3rd largest nuclear generation capacity in the world.

China is quickly increasing its nuclear power[14]

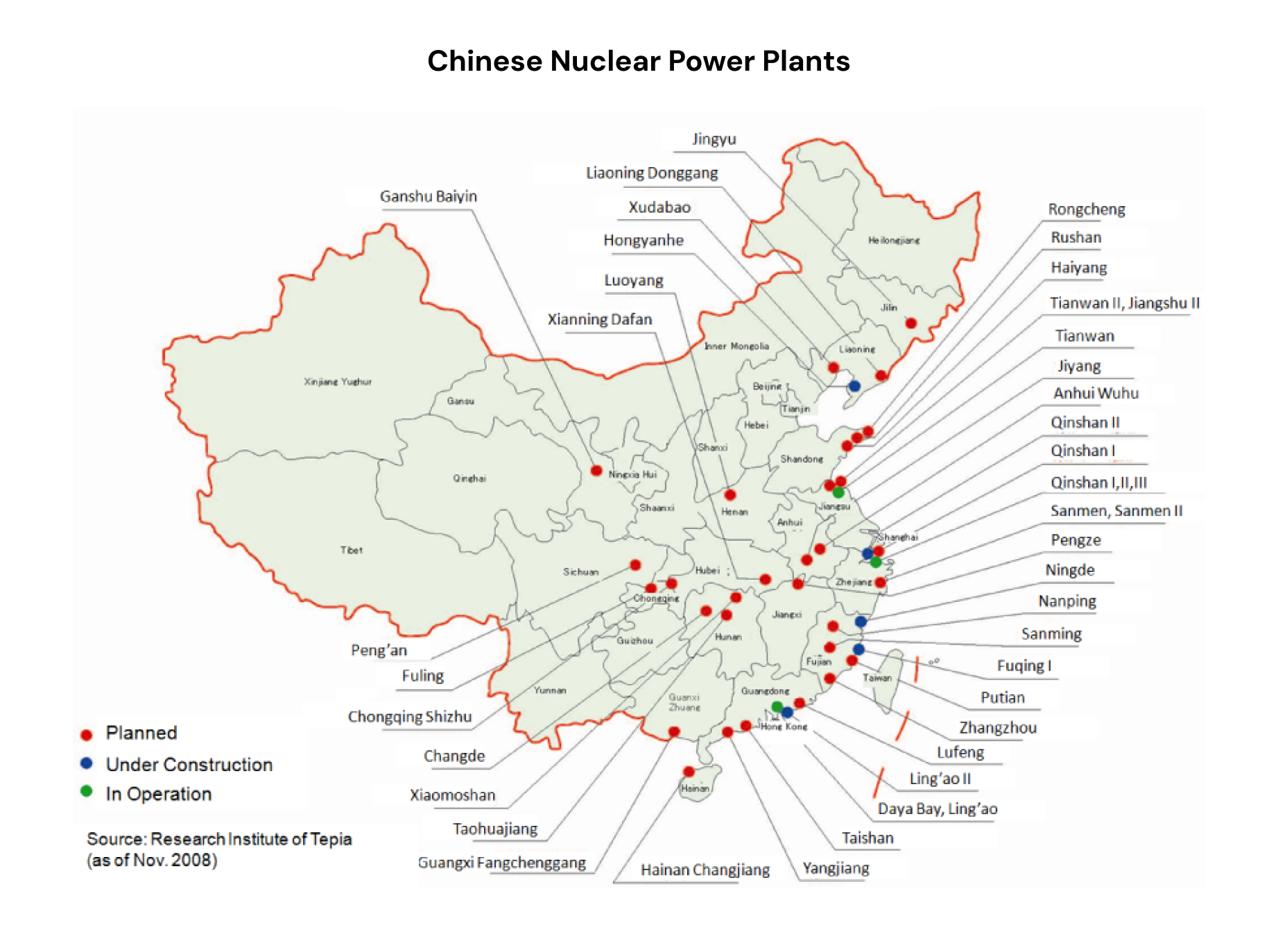

Accounting for all its currently planned nuclear projects (pictured below), it's on track to surpass the US in total power from nuclear plants.

all current and planned Chinese nuclear projects[15]

This trend is fortunate for the development of nuclear power capacity in the US. After the big nuclear accidents, technology improvements alone are unlikely to change public sentiment toward nuclear, no matter how good they are.

However, the narrative of uniting against a common competitor may be the force that shifts this sentiment.

Over the past decade, we've already seen the US start to mobilize its own nuclear initiatives to compete with Chinese progress, including government grants for SMRs and molten-salt reactors.

However, there's still a long way to go for complete regulatory alignment and governments remain very conservative with nuclear project approvals.

For example, the military revoked its >$100 million contract with Oklo to purchase an SMR for an air force base in Alaska, and the Nuclear Regulatory Commission (NRC) denied Oklo's plans to build an SMR in Idaho due to safety concerns.

Outlook on Nuclear

The bottom line on nuclear is that fission already works, and has a base-load power profile that makes it ideal for replacing coal.

Though nuclear has stagnated since the 1950s and still faces regulatory hurdles, recent progress in SMRs and safety-first reactor designs combined with pressure from US vs. China competition offer promising opportunities for further nuclear adoption.

Smart Grids

With the increasing strain on our grid brought by renewables and the need for grid balancing with battery storage systems, manual grid management has become challenging.

It's easy to forget about these challenges because grids work perfectly most of the time.

But big outages like the Texas blackout in 2021 that left >4.3 million people without power during a winter storm periodically remind us of the need for better solutions.

Smart grids bring automated monitoring, detection, and reaction to balance the grid in real time and maximize total system safety and efficiency.

Smart grids can be explained by 3 major shifts:

- Advanced metering and automated demand response with smart meters and home energy management systems.

- Automated grid monitoring and balancing with fiber optic cables on transmission lines.

- Distributed energy production with microgrids.

First, the installation of smart meters in homes allows grid operators to measure changes in electricity consumption in real time, so they can respond more quickly to fluctuations in demand.

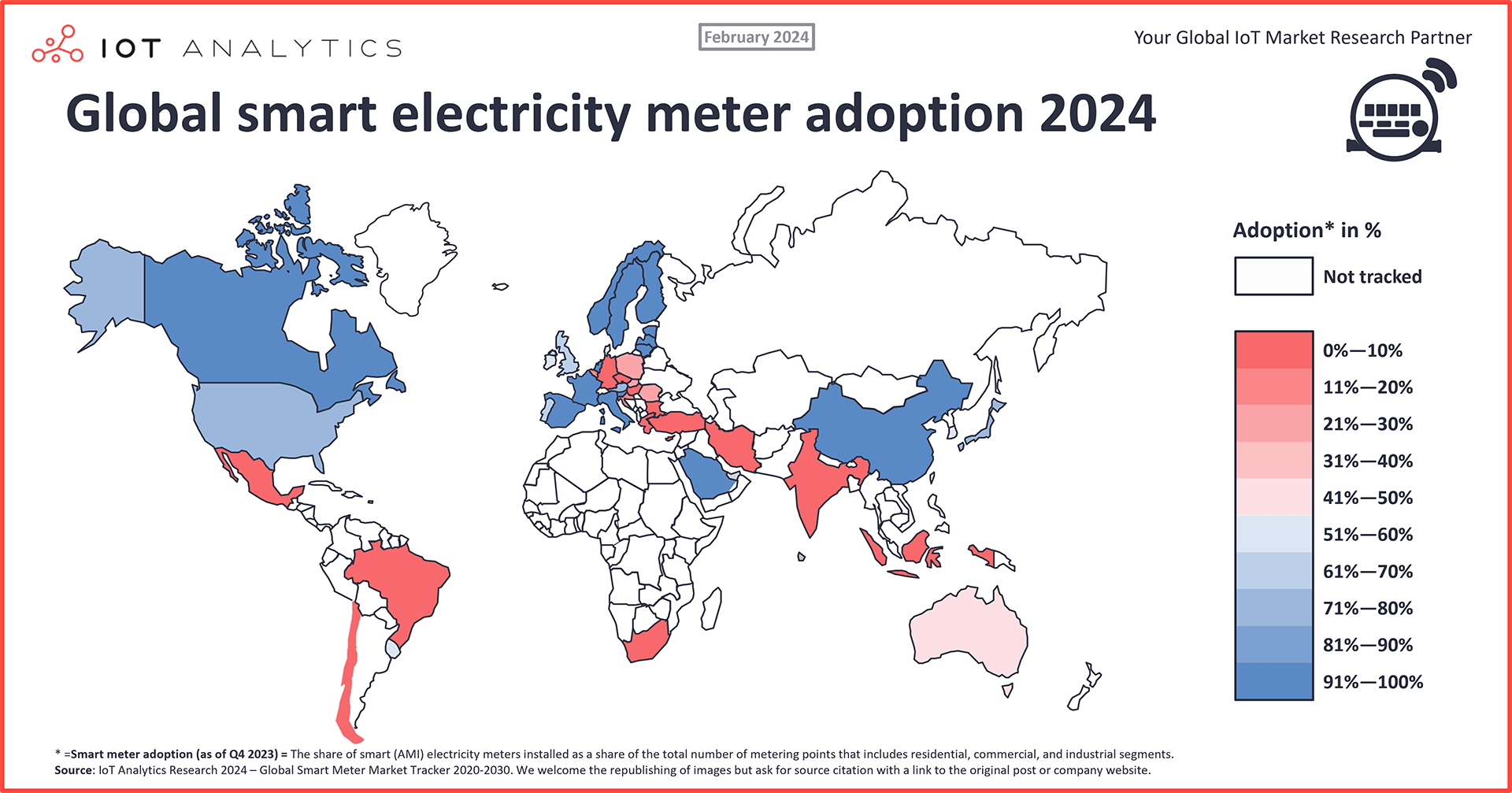

Smart meters have already been widely adopted across the world, with much of North America, Europe, and China already having smart meters on >75% of households.

global smart meter deployments[16]

Additionally, the installation of home energy management systems (HEMS) allows homes to monitor electricity prices and shift flexible demand (like laundry that can run overnight) to draw energy from the grid when electricity prices are cheapest.

This is known as automated demand response (ADR). It helps to reduce demand peaks, ease the load on the grid, and save money for consumers.

In 2020, there were ~4 million HEMS installed in households.
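At its simplest, automated demand response for one appliance means picking the cheapest block of hours to run it. A minimal sketch, assuming a hypothetical hourly price list for one day and a load that must run for a fixed number of consecutive hours within that day (no wrap past midnight); a real HEMS would also need to respect constraints like user deadlines.

```python
def cheapest_window(prices: list[float], hours: int) -> tuple[int, float]:
    """Start hour and total price of the cheapest run of `hours` consecutive hours."""
    totals = [sum(prices[h:h + hours]) for h in range(len(prices) - hours + 1)]
    start = min(range(len(totals)), key=totals.__getitem__)
    return start, totals[start]


# Hypothetical hourly prices ($/kWh), hour 0 = midnight
prices = [0.12, 0.10, 0.09, 0.08, 0.08, 0.09, 0.14, 0.20, 0.22, 0.18, 0.15, 0.13,
          0.12, 0.12, 0.13, 0.16, 0.22, 0.30, 0.34, 0.32, 0.26, 0.20, 0.16, 0.14]

LOAD_KW = 2.0  # hypothetical laundry draw
start, total_price = cheapest_window(prices, hours=2)
print(f"Run at hour {start}: ${LOAD_KW * total_price:.2f}")
print(f"Run at 6 pm instead: ${LOAD_KW * sum(prices[18:20]):.2f}")
```

Shifting the same 2-hour load from the evening peak to the early morning trough cuts its cost by about 75% in this example, and across many homes it also flattens the evening peak.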

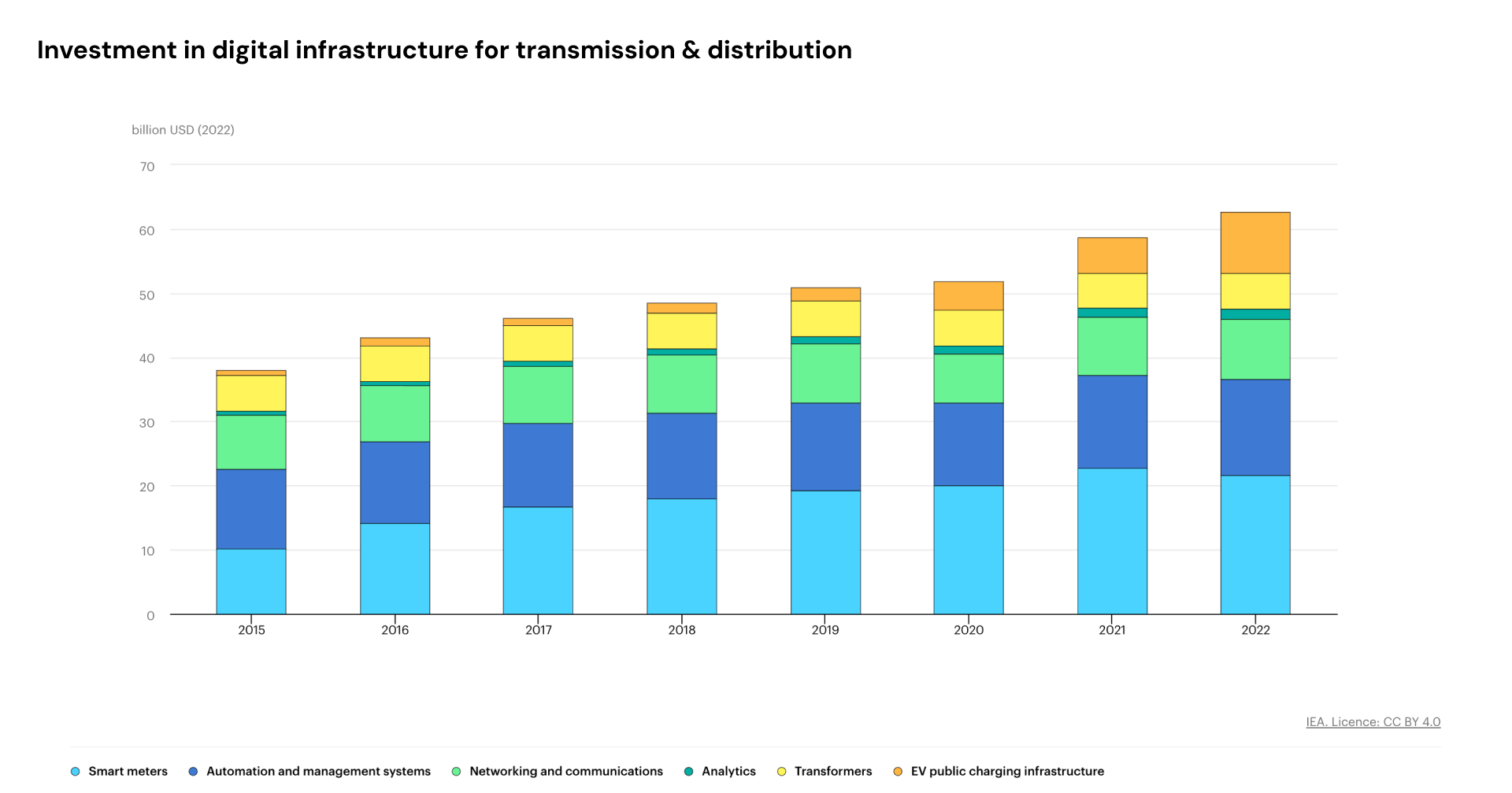

Additionally, there have been large investments in monitoring infrastructure in transmission, distribution, and storage systems to help with grid balancing.

We've started to lay fiber optic cables next to wires on transmission lines to get live updates on the function of different systems throughout the grid. This can be used to automatically detect downed lines and reroute power through other paths, as well as to monitor transmission & distribution capacities at different parts of the grid and detect overloaded wires.

These cables also allow grid controllers to collect live data on shifting grid demands, making it easier to deploy generation capacity.

Over 100,000 miles of fiber optics have already been laid along power lines to assist with smart grid monitoring.

Modern hybrid solar farms and battery storage setups also come with supervisory control and data acquisition (SCADA) systems that let operators view safety and output data and control charge and discharge.

In 2022, global investment in smart grid infrastructure reached >$62 billion, a >50% increase in investment since 2015.

global investment in smart grids has steadily increased[17]

Finally, the adoption of hybrid solar farms at the residential scale makes it possible for residences with excess electricity to supply their communities with power.

Because electricity travels much shorter distances when it flows within a residential community, local energy generation is more efficient from the grid's perspective: less energy is dissipated in the wires.

To make this possible, local communities can form microgrids that exchange energy with each other and only draw from the grid when they need extra capacity.
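The efficiency argument for local generation comes down to resistive (I²R) losses, which grow in proportion to line length. A simplified sketch, assuming a single conductor, a purely resistive load, and hypothetical values for voltage and resistance per km; real grids use three-phase AC and step long-distance power up to high voltage precisely to cut these losses.

```python
def line_loss_fraction(power_w: float, voltage_v: float,
                       ohms_per_km: float, length_km: float) -> float:
    """Fraction of transmitted power lost as heat in a simple resistive line model."""
    current_a = power_w / voltage_v            # I = P / V
    resistance_ohm = ohms_per_km * length_km   # R grows linearly with length
    return current_a ** 2 * resistance_ohm / power_w


# Hypothetical: 1 MW delivered at 12.47 kV over a conductor with 0.2 ohm/km
for km in (1, 20):
    print(f"{km:>2} km: {line_loss_fraction(1e6, 12_470, 0.2, km):.2%} lost")
```

At a fixed voltage, a 20x longer path means 20x the loss; doubling the voltage cuts the loss fraction by 4x, which is why this comparison only holds at the same voltage level.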

Microgrids are still in their early stages of development. At the start of 2023, the US had ~4.4 GW capacity across 692 microgrids, making up ~0.3% of total US power generation.

Though it's not as flashy as the global transition in energy production systems, smart grids represent an important infrastructural shift that's already underway.

Intelligence

The recent development of rapidly improving digital intelligence has brought a new focus onto the energy industry as a fundamental constraint on progress (check out my deep dive on deep learning for more on this).

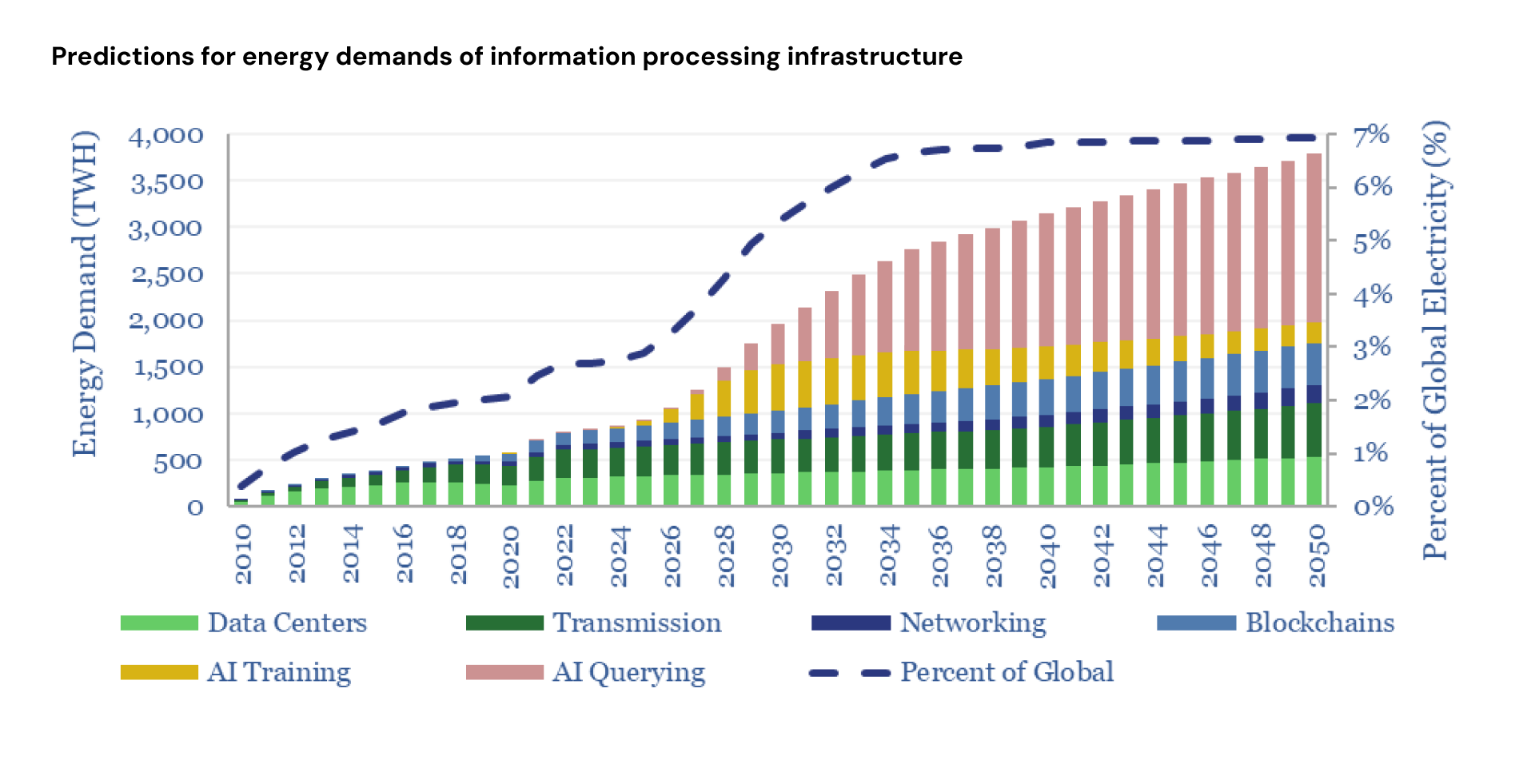

Recent projections predict that digital intelligence may grow to consume >4% of global electricity usage within the next few decades.

This would cause a >3x increase in the total energy consumption of information processing infrastructure.

an optimistic projection of increasing energy demands due to AI[18]

Training

The cost of training new AI models is primarily defined by the cost of compute in data centers used to train these models.

Given that compute is a key constraint on AI model progress, whoever is able to build the largest data centers with the most compute will be able to create the most intelligent models.

This is why we see all the largest technology companies frantically building and buying massive data centers. We can already see this trend with Microsoft and OpenAI's rumored plans to build a $100 billion data center.

However, these data centers cannot be indefinitely large.

Data centers of this size have huge energy demands. AI data centers typically require ~60 kW / rack for cooling and processing.

Large data centers currently contain ~5,000 racks. But new AI data centers will want to collocate as much compute together as possible, possibly opting for 10,000-20,000+ racks in one location.

At 20,000 racks, these data centers would require 1.2 GW of power. That's more power than the output of a typical nuclear plant. This starts to get beyond the limits of the power you can draw from the grid in one location due to the capacity limits of electrical lines.
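The arithmetic behind these figures is straightforward. A minimal sketch using the ~60 kW/rack figure from the text, and assuming (for the annual figure only) that the facility runs at full draw around the clock:

```python
KW_PER_RACK = 60        # from the text: typical AI rack draw, including cooling
HOURS_PER_YEAR = 8_760


def facility_power_gw(racks: int, kw_per_rack: float = KW_PER_RACK) -> float:
    """Total facility power in GW for a given rack count."""
    return racks * kw_per_rack / 1e6  # kW -> GW


for racks in (5_000, 10_000, 20_000):
    gw = facility_power_gw(racks)
    twh_per_year = gw * HOURS_PER_YEAR / 1_000  # GWh -> TWh, assuming 100% utilization
    print(f"{racks:>6,} racks: {gw:.1f} GW, ~{twh_per_year:.1f} TWh/year")
```

Going from today's ~5,000 racks to 20,000 racks quadruples the draw from 0.3 GW to 1.2 GW at a single site.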

The total power you can access at the location of the data center determines the maximum size of the data center you can build.

For this reason, new data centers are opting to build their own electricity generation capacity to maximize the power available to them.

For example, in March 2024, Amazon spent $650 million on a data center right next to a 2.5 GW nuclear plant to try to purchase extra power directly from the plant.

Larry Ellison just announced that Oracle is planning to build a new data center powered by 3 SMRs.

Microsoft is also looking into SMRs to power its new data centers.

We can see that most of these companies are particularly focused on collocating data centers with nuclear, likely due to its high spatial energy density, which maximizes the total power available to a data center, as well as the fact that nuclear is a clean energy source (imagine the optics of AI being associated with destroying our environment).

Inference

The cost of running inference on existing models is determined by the cost of compute plus the premium charged by AI labs.

Current frontier models with similar parameter sizes appear somewhat undifferentiated, with large labs quickly reaching feature parity with each other after new releases.

If this trend continues, individual labs may have low pricing power to increase inference costs for their models.

In this case, the cost of inference will depend on the cost of compute, with energy as the main operating cost driving the cost of compute in data centers.

This suggests that the cost of intelligence will remain closely tied to the cost of energy.

As we move toward cheaper energy sources over time like solar, the cost of intelligence could decrease in proportion to the cost of energy.
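How closely the cost of intelligence tracks the cost of energy depends on how much of compute cost is actually electricity. A minimal sketch, with the energy share as a free parameter rather than a measured value:

```python
def relative_compute_cost(energy_share: float, energy_price_ratio: float) -> float:
    """Cost of compute relative to today when electricity prices change.

    energy_share: fraction of today's compute cost that is electricity (0-1).
    energy_price_ratio: new electricity price / today's price (e.g. 0.5 = half).
    Everything else (hardware, margins, ...) is held constant.
    """
    return energy_share * energy_price_ratio + (1 - energy_share)


# If electricity gets 50% cheaper, how much cheaper does compute get?
for share in (1.0, 0.8, 0.5):
    print(f"energy share {share:.0%}: cost falls to {relative_compute_cost(share, 0.5):.0%}")
```

The cost of intelligence falls in full proportion to energy only when energy dominates compute cost, which is the assumption in the scenario above.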

Demand for digital intelligence may become price elastic as models improve, meaning that cheaper intelligence would lead to more widespread usage of AI inference for a variety of tasks.

Then, AI would increasingly permeate society as the cost of energy decreases.

Intelligence as a Utility

Finally, digital intelligence could become a regulated utility like electricity.

A utility industry is one that (1) provides a basic necessity and (2) has natural monopolies.

If current AI development trends continue, it's very likely that digital intelligence will become so fundamental to our daily function that it will qualify as a basic necessity.

However, it's unclear whether it will also become a natural monopoly industry.

Natural monopolies usually form in industries with physical infrastructure that directly serves customers, like the distribution systems in electrical grids.

This type of physical infrastructure does not exist in AI (data centers are physical infrastructure, but customers are not forced to use a data center based on proximity).

However, it's possible that training frontier AI models becomes so capital intensive that only one company is capable of continuing development.

Currently, there are arguably only 6 companies with the continuous streams of capital and talent required to keep training frontier models: Microsoft, Google, Amazon, Meta, NVIDIA, and Elon (all his companies are basically one entity with xAI/Tesla cross-pollinating).

For context, OpenAI has access to capital and resources from Microsoft, and Anthropic has access to capital and resources from Amazon.

If all these companies are able to keep up with each other and indefinitely justify investment into training frontier models, AI will remain competitive.

However, if one company is able to develop a temporarily superior model that results in significant revenue capture, they can drive this capital back into model development to stay ahead.

This could create a virtuous cycle that allows one competitor to pull ahead of the rest. At the scale of these companies, this revenue advantage would have to be on the order of tens of billions of dollars, which is larger than the size of all inference demand today.

In this case, frontier model development would become a natural monopoly, with no other companies having sufficient capital to compete.

Then, it would make sense for digital intelligence to become a utility to prevent anti-competitive behaviors.